diff --git a/LICENSE b/LICENSE

new file mode 100755

index 00000000..d75f0ee8

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,58 @@

+Copyright (c) 2017, Jun-Yan Zhu and Taesung Park

+All rights reserved.

+

+Redistribution and use in source and binary forms, with or without

+modification, are permitted provided that the following conditions are met:

+

+* Redistributions of source code must retain the above copyright notice, this

+ list of conditions and the following disclaimer.

+

+* Redistributions in binary form must reproduce the above copyright notice,

+ this list of conditions and the following disclaimer in the documentation

+ and/or other materials provided with the distribution.

+

+THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

+AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

+IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

+DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

+FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

+DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

+SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

+CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

+OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

+OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

+

+

+--------------------------- LICENSE FOR pix2pix --------------------------------

+BSD License

+

+For pix2pix software

+Copyright (c) 2016, Phillip Isola and Jun-Yan Zhu

+All rights reserved.

+

+Redistribution and use in source and binary forms, with or without

+modification, are permitted provided that the following conditions are met:

+

+* Redistributions of source code must retain the above copyright notice, this

+ list of conditions and the following disclaimer.

+

+* Redistributions in binary form must reproduce the above copyright notice,

+ this list of conditions and the following disclaimer in the documentation

+ and/or other materials provided with the distribution.

+

+----------------------------- LICENSE FOR DCGAN --------------------------------

+BSD License

+

+For dcgan.torch software

+

+Copyright (c) 2015, Facebook, Inc. All rights reserved.

+

+Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

+

+Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

+

+Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

+

+Neither the name Facebook nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

+

+THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

diff --git a/README.md b/README.md

new file mode 100755

index 00000000..0b20c06f

--- /dev/null

+++ b/README.md

@@ -0,0 +1,214 @@

+ +

+

+

+

+

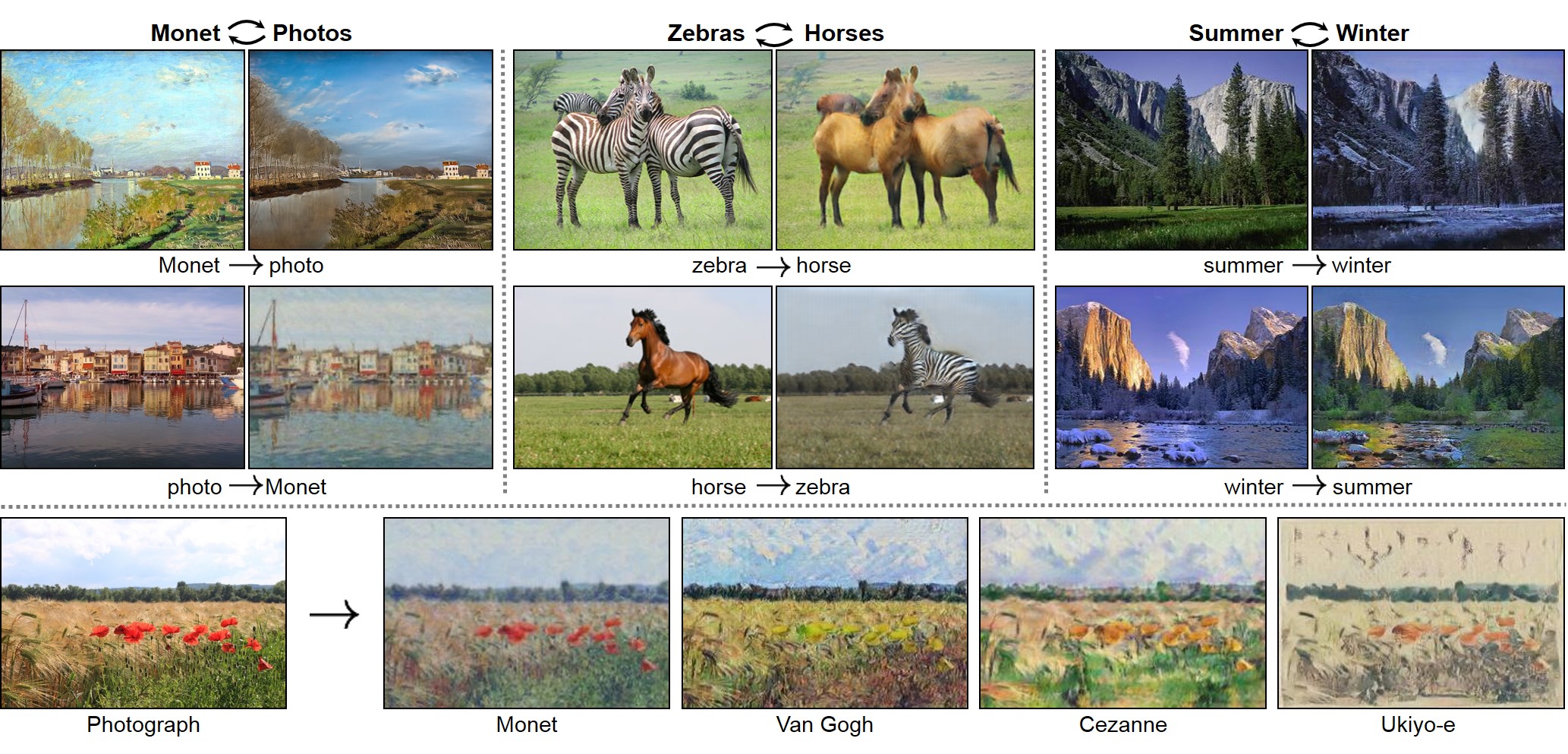

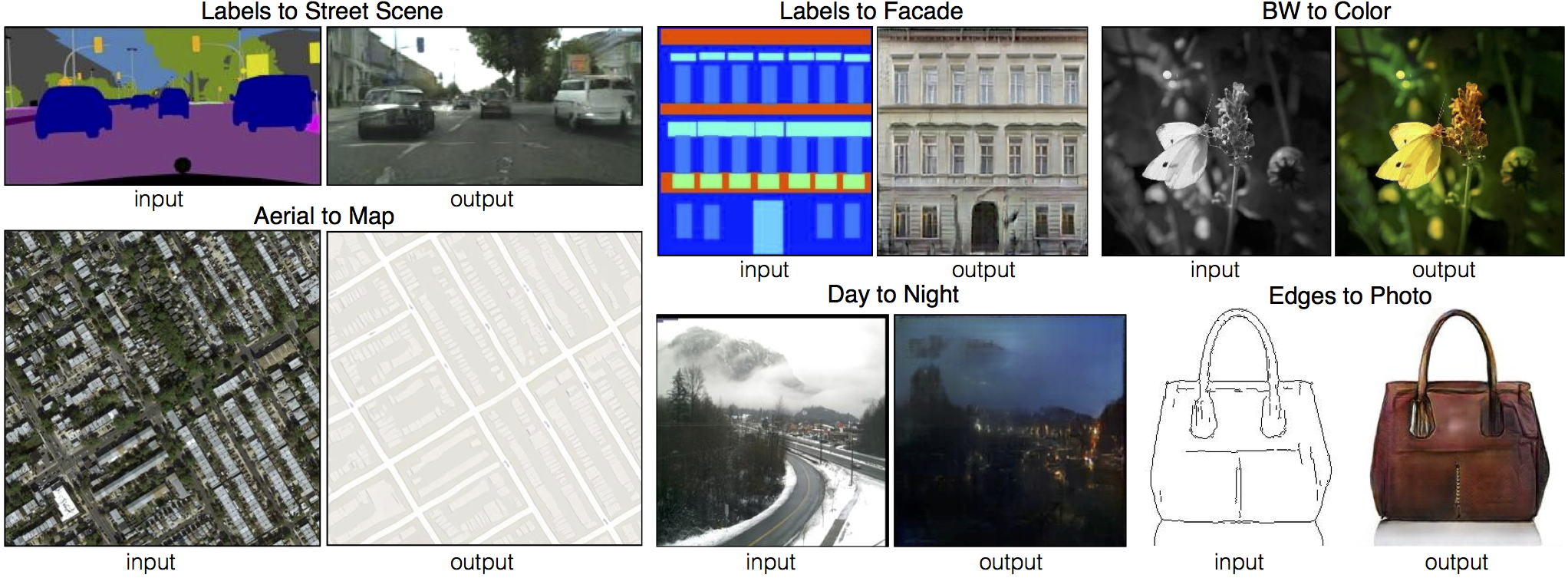

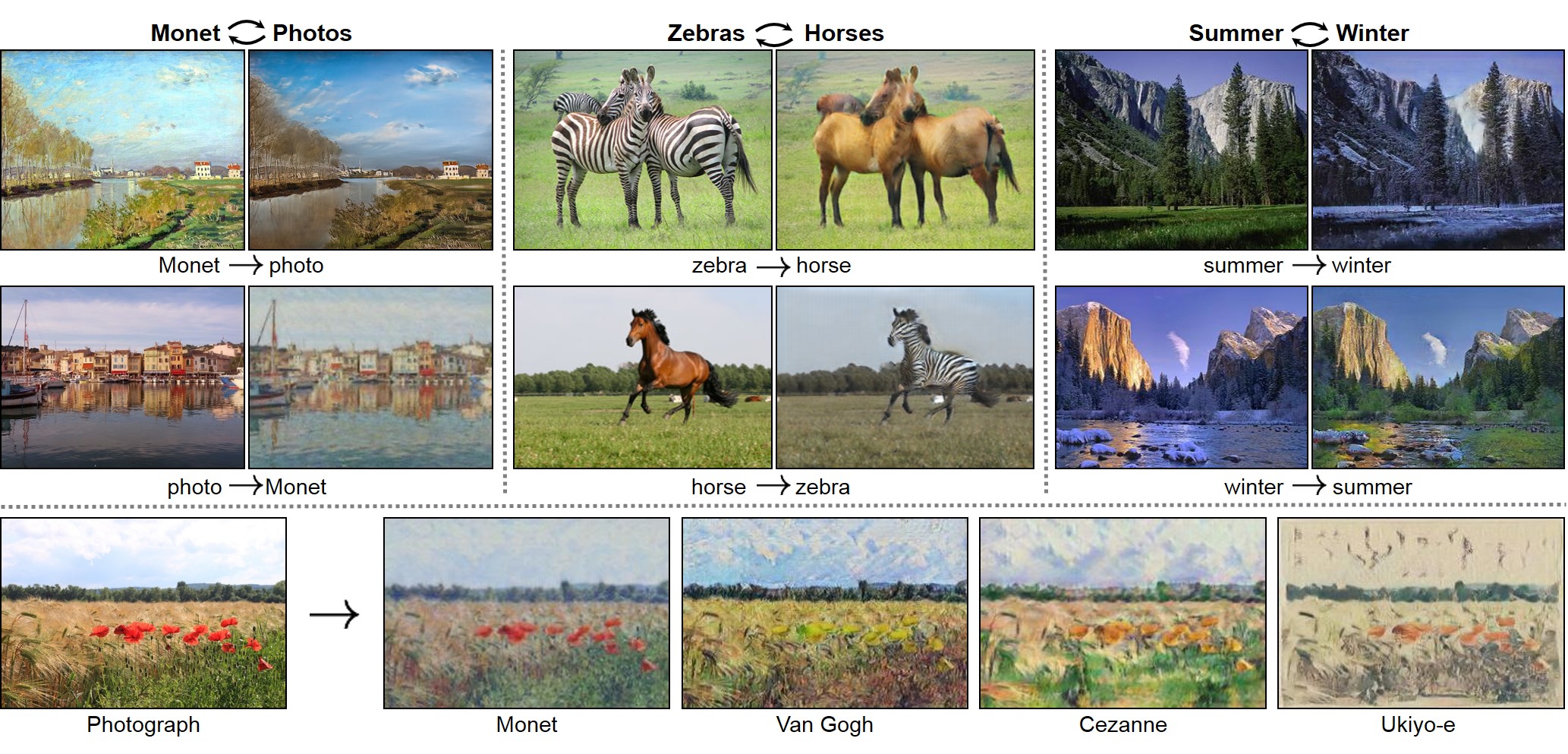

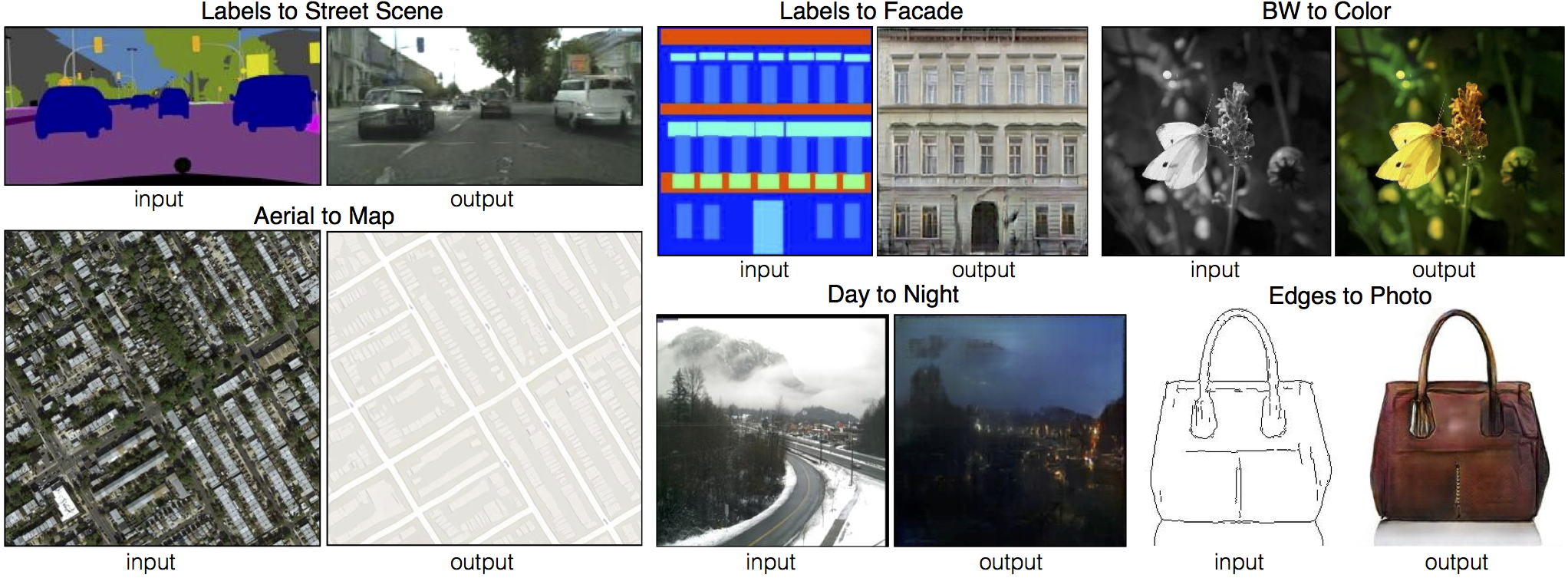

+# CycleGAN and pix2pix in PyTorch

+

+We provide PyTorch implementations for both unpaired and paired image-to-image translation.

+

+The code was written by [Jun-Yan Zhu](https://github.com/junyanz) and [Taesung Park](https://github.com/taesung89), and supported by [Tongzhou Wang](https://ssnl.github.io/).

+

+This PyTorch implementation produces results comparable to or better than our original Torch software. If you would like to reproduce the same results as in the papers, check out the original [CycleGAN Torch](https://github.com/junyanz/CycleGAN) and [pix2pix Torch](https://github.com/phillipi/pix2pix) code.

+

+**Note**: The current software works well with PyTorch 0.4+. Check out the older [branch](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/tree/pytorch0.3.1) that supports PyTorch 0.1-0.3.

+

+You may find useful information in [training/test tips](docs/tips.md) and [frequently asked questions](docs/qa.md).

+

+**CycleGAN: [Project](https://junyanz.github.io/CycleGAN/) | [Paper](https://arxiv.org/pdf/1703.10593.pdf) | [Torch](https://github.com/junyanz/CycleGAN)**


+

+**Pix2pix: [Project](https://phillipi.github.io/pix2pix/) | [Paper](https://arxiv.org/pdf/1611.07004.pdf) | [Torch](https://github.com/phillipi/pix2pix)**

+


+

+**[EdgesCats Demo](https://affinelayer.com/pixsrv/) | [pix2pix-tensorflow](https://github.com/affinelayer/pix2pix-tensorflow) | by [Christopher Hesse](https://twitter.com/christophrhesse)**

+


+If you use this code for your research, please cite:

+

+Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

+[Jun-Yan Zhu](https://people.eecs.berkeley.edu/~junyanz/)\*, [Taesung Park](https://taesung.me/)\*, [Phillip Isola](https://people.eecs.berkeley.edu/~isola/), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros)

+In ICCV 2017. (* equal contributions) [[Bibtex]](https://junyanz.github.io/CycleGAN/CycleGAN.txt)

+

+

+Image-to-Image Translation with Conditional Adversarial Networks

+[Phillip Isola](https://people.eecs.berkeley.edu/~isola), [Jun-Yan Zhu](https://people.eecs.berkeley.edu/~junyanz), [Tinghui Zhou](https://people.eecs.berkeley.edu/~tinghuiz), [Alexei A. Efros](https://people.eecs.berkeley.edu/~efros)

+In CVPR 2017. [[Bibtex]](http://people.csail.mit.edu/junyanz/projects/pix2pix/pix2pix.bib)

+

+## Course

+CycleGAN course assignment [code](http://www.cs.toronto.edu/~rgrosse/courses/csc321_2018/assignments/a4-code.zip) and [handout](http://www.cs.toronto.edu/~rgrosse/courses/csc321_2018/assignments/a4-handout.pdf) designed by Prof. [Roger Grosse](http://www.cs.toronto.edu/~rgrosse/) for [CSC321](http://www.cs.toronto.edu/~rgrosse/courses/csc321_2018/) "Intro to Neural Networks and Machine Learning" at University of Toronto. Please contact the instructor if you would like to adopt it in your course.

+

+## Other implementations

+### CycleGAN


+ [Tensorflow] (by Harry Yang),

+[Tensorflow] (by Archit Rathore),

+[Tensorflow] (by Van Huy),

+[Tensorflow] (by Xiaowei Hu),

+ [Tensorflow-simple] (by Zhenliang He),

+ [TensorLayer] (by luoxier),

+[Chainer] (by Yanghua Jin),

+[Minimal PyTorch] (by yunjey),

+[Mxnet] (by Ldpe2G),

+[lasagne/keras] (by tjwei)

+

+

+### pix2pix

+ [Tensorflow] (by Christopher Hesse),

+[Tensorflow] (by Eyyüb Sariu),

+ [Tensorflow (face2face)] (by Dat Tran),

+ [Tensorflow (film)] (by Arthur Juliani),

+[Tensorflow (zi2zi)] (by Yuchen Tian),

+[Chainer] (by mattya),

+[tf/torch/keras/lasagne] (by tjwei),

+[Pytorch] (by taey16)

+

+

+

+## Prerequisites

+- Linux or macOS

+- Python 2 or 3

+- CPU or NVIDIA GPU + CUDA CuDNN

+

+## Getting Started

+### Installation

+

+- Clone this repo:

+```bash

+git clone https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

+cd pytorch-CycleGAN-and-pix2pix

+```

+

+- Install PyTorch 0.4+ and torchvision from http://pytorch.org, along with the other dependencies (e.g., [visdom](https://github.com/facebookresearch/visdom) and [dominate](https://github.com/Knio/dominate)). You can install all the dependencies by running:

+```bash

+pip install -r requirements.txt

+```

+

+- For Conda users, we include a script `./scripts/conda_deps.sh` to install PyTorch and other libraries.

+

+### CycleGAN train/test

+- Download a CycleGAN dataset (e.g. maps):

+```bash

+bash ./datasets/download_cyclegan_dataset.sh maps

+```

+- Train a model:

+```bash

+#!./scripts/train_cyclegan.sh

+python train.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan

+```

+- To view training results and loss plots, run `python -m visdom.server` and click the URL http://localhost:8097. To see more intermediate results, check out `./checkpoints/maps_cyclegan/web/index.html`.

+- Test the model:

+```bash

+#!./scripts/test_cyclegan.sh

+python test.py --dataroot ./datasets/maps --name maps_cyclegan --model cycle_gan

+```

+The test results will be saved to an HTML file here: `./results/maps_cyclegan/test_latest/index.html`.

+

+### pix2pix train/test

+- Download a pix2pix dataset (e.g. facades):

+```bash

+bash ./datasets/download_pix2pix_dataset.sh facades

+```

+- Train a model:

+```bash

+#!./scripts/train_pix2pix.sh

+python train.py --dataroot ./datasets/facades --name facades_pix2pix --model pix2pix --direction BtoA

+```

+- To view training results and loss plots, run `python -m visdom.server` and click the URL http://localhost:8097. To see more intermediate results, check out `./checkpoints/facades_pix2pix/web/index.html`.

+- Test the model (`bash ./scripts/test_pix2pix.sh`):

+```bash

+#!./scripts/test_pix2pix.sh

+python test.py --dataroot ./datasets/facades --name facades_pix2pix --model pix2pix --direction BtoA

+```

+The test results will be saved to an HTML file here: `./results/facades_pix2pix/test_latest/index.html`.

+

+You can find more scripts in the `scripts` directory.

+

+### Apply a pre-trained model (CycleGAN)

+- You can download a pretrained model (e.g. horse2zebra) with the following script:

+```bash

+bash ./scripts/download_cyclegan_model.sh horse2zebra

+```

+The pretrained model is saved at `./checkpoints/{name}_pretrained/latest_net_G.pth`. Check [here](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/scripts/download_cyclegan_model.sh#L3) for all the available CycleGAN models.

+- To test the model, you also need to download the horse2zebra dataset:

+```bash

+bash ./datasets/download_cyclegan_dataset.sh horse2zebra

+```

+

+- Then generate the results using

+```bash

+python test.py --dataroot datasets/horse2zebra/testA --name horse2zebra_pretrained --model test

+```

+The option `--model test` generates CycleGAN results in one direction only. `python test.py --model cycle_gan` requires loading models and generating results in both directions, which is often unnecessary. The results will be saved to `./results/`. Use `--results_dir {directory_path_to_save_result}` to specify a different results directory.

+

+- If you would like to apply a pre-trained model to a collection of input images (rather than image pairs), please use `--dataset_mode single` and `--model test` options. Here is a script to apply a model to Facade label maps (stored in the directory `facades/testB`).

+``` bash

+#!./scripts/test_single.sh

+python test.py --dataroot ./datasets/facades/testB/ --name {your_trained_model_name} --model test

+```

+You might want to specify `--netG` to match the generator architecture of the trained model.

+

+### Apply a pre-trained model (pix2pix)

+

+Download a pre-trained model with `./scripts/download_pix2pix_model.sh`.

+

+- Check [here](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/scripts/download_pix2pix_model.sh#L3) for all the available pix2pix models. For example, to download the label2photo model trained on the Facades dataset:

+```bash

+bash ./scripts/download_pix2pix_model.sh facades_label2photo

+```

+- Download the pix2pix facades dataset:

+```bash

+bash ./datasets/download_pix2pix_dataset.sh facades

+```

+- Then generate the results using

+```bash

+python test.py --dataroot ./datasets/facades/ --direction BtoA --model pix2pix --name facades_label2photo_pretrained

+```

+Note that we specified `--direction BtoA` because the Facades dataset's A-to-B direction is photos to labels.

+

+- See a list of currently available models at `./scripts/download_pix2pix_model.sh`

+

+## [Datasets](docs/datasets.md)

+Download pix2pix/CycleGAN datasets and create your own datasets.

+

+## [Training/Test Tips](docs/tips.md)

+Best practices for training and testing your models.

+

+## [Frequently Asked Questions](docs/qa.md)

+Before you post a new question, please first look at the above Q & A and existing GitHub issues.

+

+

+## Citation

+If you use this code for your research, please cite our papers.

+```

+@inproceedings{CycleGAN2017,

+ title={Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks},

+ author={Zhu, Jun-Yan and Park, Taesung and Isola, Phillip and Efros, Alexei A},

+ booktitle={Computer Vision (ICCV), 2017 IEEE International Conference on},

+ year={2017}

+}

+

+

+@inproceedings{isola2017image,

+ title={Image-to-Image Translation with Conditional Adversarial Networks},

+ author={Isola, Phillip and Zhu, Jun-Yan and Zhou, Tinghui and Efros, Alexei A},

+ booktitle={Computer Vision and Pattern Recognition (CVPR), 2017 IEEE Conference on},

+ year={2017}

+}

+```

+

+

+

+## Related Projects

+**[CycleGAN-Torch](https://github.com/junyanz/CycleGAN) |

+[pix2pix-Torch](https://github.com/phillipi/pix2pix) | [pix2pixHD](https://github.com/NVIDIA/pix2pixHD) |

+[iGAN](https://github.com/junyanz/iGAN) |

+[BicycleGAN](https://github.com/junyanz/BicycleGAN)**

+

+## Cat Paper Collection

+If you love cats, and love reading cool graphics, vision, and learning papers, please check out the Cat Paper [Collection](https://github.com/junyanz/CatPapers).

+

+## Acknowledgments

+Our code is inspired by [pytorch-DCGAN](https://github.com/pytorch/examples/tree/master/dcgan).

diff --git a/data/__init__.py b/data/__init__.py

new file mode 100755

index 00000000..8e695a7c

--- /dev/null

+++ b/data/__init__.py

@@ -0,0 +1,75 @@

+import importlib

+import torch.utils.data

+from data.base_data_loader import BaseDataLoader

+from data.base_dataset import BaseDataset

+

+

+def find_dataset_using_name(dataset_name):
+    # Given the option --dataset_mode [datasetname],
+    # the file "data/datasetname_dataset.py"
+    # will be imported.
+    dataset_filename = "data." + dataset_name + "_dataset"
+    datasetlib = importlib.import_module(dataset_filename)
+
+    # In the file, the class called DatasetNameDataset() will
+    # be instantiated. It has to be a subclass of BaseDataset,
+    # and the class-name match is case-insensitive.
+    dataset = None
+    target_dataset_name = dataset_name.replace('_', '') + 'dataset'
+    for name, cls in datasetlib.__dict__.items():
+        if name.lower() == target_dataset_name.lower() \
+           and issubclass(cls, BaseDataset):
+            dataset = cls
+
+    if dataset is None:
+        print("In %s.py, there should be a subclass of BaseDataset with class name that matches %s in lowercase." % (dataset_filename, target_dataset_name))
+        exit(1)  # non-zero status: the requested dataset could not be found
+
+    return dataset

+

+

+def get_option_setter(dataset_name):
+    dataset_class = find_dataset_using_name(dataset_name)
+    return dataset_class.modify_commandline_options
+
+
+def create_dataset(opt):
+    dataset = find_dataset_using_name(opt.dataset_mode)
+    instance = dataset()
+    instance.initialize(opt)
+    print("dataset [%s] was created" % (instance.name()))
+    return instance
+
+
+def CreateDataLoader(opt):
+    data_loader = CustomDatasetDataLoader()
+    data_loader.initialize(opt)
+    return data_loader
+
+
+# Wrapper class of Dataset class that performs
+# multi-threaded data loading
+class CustomDatasetDataLoader(BaseDataLoader):
+    def name(self):
+        return 'CustomDatasetDataLoader'
+
+    def initialize(self, opt):
+        BaseDataLoader.initialize(self, opt)
+        self.dataset = create_dataset(opt)
+        self.dataloader = torch.utils.data.DataLoader(
+            self.dataset,
+            batch_size=opt.batch_size,
+            shuffle=not opt.serial_batches,
+            num_workers=int(opt.num_threads))
+
+    def load_data(self):
+        return self
+
+    def __len__(self):
+        return min(len(self.dataset), self.opt.max_dataset_size)
+
+    def __iter__(self):
+        for i, data in enumerate(self.dataloader):
+            if i * self.opt.batch_size >= self.opt.max_dataset_size:
+                break
+            yield data
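`find_dataset_using_name` relies on a naming convention: `--dataset_mode foo_bar` imports `data/foo_bar_dataset.py` and picks the class whose lowercased name is `foobardataset`. Below is a minimal, torch-free sketch of that lookup, not the repository's code. `resolve_class_by_mode` is a hypothetical helper, the module is built in memory with `types.ModuleType` instead of being imported, and the sketch raises `LookupError` rather than calling `exit`.

```python
import types


def resolve_class_by_mode(module, mode, base_cls):
    """Return the class in `module` whose lowercased name equals
    mode-without-underscores + 'dataset' and which subclasses base_cls."""
    target = (mode.replace('_', '') + 'dataset').lower()
    for name, obj in vars(module).items():
        # issubclass() raises TypeError for non-classes, so check isinstance first
        if name.lower() == target and isinstance(obj, type) and issubclass(obj, base_cls):
            return obj
    raise LookupError('no subclass of %s named like %r in %s'
                      % (base_cls.__name__, target, module.__name__))


class BaseDataset:  # hypothetical stand-in for data.base_dataset.BaseDataset
    pass


# In-memory stand-in for data/unaligned_dataset.py
fake = types.ModuleType('data.unaligned_dataset')
fake.UnalignedDataset = type('UnalignedDataset', (BaseDataset,), {})
fake.helper = lambda: None  # other module members are ignored

cls = resolve_class_by_mode(fake, 'unaligned', BaseDataset)
print(cls.__name__)  # UnalignedDataset
```

Raising an exception instead of exiting lets a caller (or a test) decide how to report an unknown `--dataset_mode`.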

diff --git a/data/__init__.pyc b/data/__init__.pyc

new file mode 100755

index 00000000..15d7de5d

Binary files /dev/null and b/data/__init__.pyc differ

diff --git a/data/aligned_dataset.py b/data/aligned_dataset.py

new file mode 100755

index 00000000..9f460364

--- /dev/null

+++ b/data/aligned_dataset.py

@@ -0,0 +1,69 @@

+import os.path

+import random

+import torchvision.transforms as transforms

+import torch

+from data.base_dataset import BaseDataset

+from data.image_folder import make_dataset

+from PIL import Image

+

+

+class AlignedDataset(BaseDataset):
+    @staticmethod
+    def modify_commandline_options(parser, is_train):
+        return parser
+
+    def initialize(self, opt):
+        self.opt = opt
+        self.root = opt.dataroot
+        self.dir_AB = os.path.join(opt.dataroot, opt.phase)
+        self.AB_paths = sorted(make_dataset(self.dir_AB))
+        assert(opt.resize_or_crop == 'resize_and_crop')
+
+    def __getitem__(self, index):
+        AB_path = self.AB_paths[index]
+        AB = Image.open(AB_path).convert('RGB')
+        w, h = AB.size
+        assert(self.opt.loadSize >= self.opt.fineSize)
+        w2 = int(w / 2)
+        A = AB.crop((0, 0, w2, h)).resize((self.opt.loadSize, self.opt.loadSize), Image.BICUBIC)
+        B = AB.crop((w2, 0, w, h)).resize((self.opt.loadSize, self.opt.loadSize), Image.BICUBIC)
+        A = transforms.ToTensor()(A)
+        B = transforms.ToTensor()(B)
+        # random.randint is inclusive, so loadSize - fineSize is the largest valid offset
+        w_offset = random.randint(0, max(0, self.opt.loadSize - self.opt.fineSize))
+        h_offset = random.randint(0, max(0, self.opt.loadSize - self.opt.fineSize))
+
+        A = A[:, h_offset:h_offset + self.opt.fineSize, w_offset:w_offset + self.opt.fineSize]
+        B = B[:, h_offset:h_offset + self.opt.fineSize, w_offset:w_offset + self.opt.fineSize]
+
+        A = transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))(A)
+        B = transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))(B)
+
+        if self.opt.direction == 'BtoA':
+            input_nc = self.opt.output_nc
+            output_nc = self.opt.input_nc
+        else:
+            input_nc = self.opt.input_nc
+            output_nc = self.opt.output_nc
+
+        if (not self.opt.no_flip) and random.random() < 0.5:
+            idx = [i for i in range(A.size(2) - 1, -1, -1)]
+            idx = torch.LongTensor(idx)
+            A = A.index_select(2, idx)
+            B = B.index_select(2, idx)
+
+        if input_nc == 1:  # RGB to gray
+            tmp = A[0, ...] * 0.299 + A[1, ...] * 0.587 + A[2, ...] * 0.114
+            A = tmp.unsqueeze(0)
+
+        if output_nc == 1:  # RGB to gray
+            tmp = B[0, ...] * 0.299 + B[1, ...] * 0.587 + B[2, ...] * 0.114
+            B = tmp.unsqueeze(0)
+
+        return {'A': A, 'B': B,
+                'A_paths': AB_path, 'B_paths': AB_path}
+
+    def __len__(self):
+        return len(self.AB_paths)
+
+    def name(self):
+        return 'AlignedDataset'
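The crop geometry in `AlignedDataset.__getitem__` can be checked without loading images. This sketch assumes the input is an A|B pair stored side by side in one file. `split_ab_boxes` and `random_crop_offset` are hypothetical helpers, and 286/256 are only example `loadSize`/`fineSize` values.

```python
import random


def split_ab_boxes(width, height):
    """(left, upper, right, lower) boxes for the A (left) and B (right) halves."""
    w2 = width // 2  # same as int(w / 2) for non-negative widths
    return (0, 0, w2, height), (w2, 0, width, height)


def random_crop_offset(load_size, fine_size, rng=random):
    """One top-left offset for a fine_size crop inside a load_size square.

    random.randint includes both endpoints, so load_size - fine_size is the
    largest offset whose crop still fits; A and B must share the offset.
    """
    return rng.randint(0, max(0, load_size - fine_size))


box_a, box_b = split_ab_boxes(512, 256)
print(box_a, box_b)  # (0, 0, 256, 256) (256, 0, 512, 256)

offset = random_crop_offset(286, 256)
print(0 <= offset <= 30)  # True
```

Applying the same offsets to both halves is what keeps the A/B pair pixel-aligned after cropping.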

diff --git a/data/base_data_loader.py b/data/base_data_loader.py

new file mode 100755

index 00000000..ae5a1689

--- /dev/null

+++ b/data/base_data_loader.py

@@ -0,0 +1,10 @@

+class BaseDataLoader():
+    def __init__(self):
+        pass
+
+    def initialize(self, opt):
+        self.opt = opt
+
+    def load_data(self):
+        return None

diff --git a/data/base_data_loader.pyc b/data/base_data_loader.pyc

new file mode 100755

index 00000000..ba42f466

Binary files /dev/null and b/data/base_data_loader.pyc differ

diff --git a/data/base_dataset.py b/data/base_dataset.py

new file mode 100755

index 00000000..e8b5e9ba

--- /dev/null

+++ b/data/base_dataset.py

@@ -0,0 +1,102 @@

+import torch.utils.data as data

+from PIL import Image

+import torchvision.transforms as transforms

+

+

+class BaseDataset(data.Dataset):
+    def __init__(self):
+        super(BaseDataset, self).__init__()
+
+    def name(self):
+        return 'BaseDataset'
+
+    @staticmethod
+    def modify_commandline_options(parser, is_train):
+        return parser
+
+    def initialize(self, opt):
+        pass
+
+    def __len__(self):
+        return 0
+
+
+def get_transform(opt):
+    transform_list = []
+    if opt.resize_or_crop == 'resize_and_crop':
+        osize = [opt.loadSize, opt.loadSize]
+        transform_list.append(transforms.Resize(osize, Image.BICUBIC))
+        transform_list.append(transforms.RandomCrop(opt.fineSize))
+    elif opt.resize_or_crop == 'crop':
+        transform_list.append(transforms.RandomCrop(opt.fineSize))
+    elif opt.resize_or_crop == 'scale_width':
+        transform_list.append(transforms.Lambda(
+            lambda img: __scale_width(img, opt.fineSize)))
+    elif opt.resize_or_crop == 'scale_width_and_crop':
+        transform_list.append(transforms.Lambda(
+            lambda img: __scale_width(img, opt.loadSize)))
+        transform_list.append(transforms.RandomCrop(opt.fineSize))
+    elif opt.resize_or_crop == 'none':
+        transform_list.append(transforms.Lambda(
+            lambda img: __adjust(img)))
+    else:
+        raise ValueError('--resize_or_crop %s is not a valid option.' % opt.resize_or_crop)
+
+    if opt.isTrain and not opt.no_flip:
+        transform_list.append(transforms.RandomHorizontalFlip())
+
+    transform_list += [transforms.ToTensor(),
+                       transforms.Normalize((0.5, 0.5, 0.5),
+                                            (0.5, 0.5, 0.5))]
+    return transforms.Compose(transform_list)
+
+
+# Round the width and height up to the nearest multiples of 4
+def __adjust(img):
+    ow, oh = img.size
+
+    # the size needs to be a multiple of this number,
+    # because going through generator network may change img size
+    # and eventually cause size mismatch error
+    mult = 4
+    if ow % mult == 0 and oh % mult == 0:
+        return img
+    w = (ow - 1) // mult
+    w = (w + 1) * mult
+    h = (oh - 1) // mult
+    h = (h + 1) * mult
+
+    if ow != w or oh != h:
+        __print_size_warning(ow, oh, w, h)
+
+    return img.resize((w, h), Image.BICUBIC)
+
+
+def __scale_width(img, target_width):
+    ow, oh = img.size
+
+    # the size needs to be a multiple of this number,
+    # because going through generator network may change img size
+    # and eventually cause size mismatch error
+    mult = 4
+    assert target_width % mult == 0, "the target width needs to be multiple of %d." % mult
+    if (ow == target_width and oh % mult == 0):
+        return img
+    w = target_width
+    target_height = int(target_width * oh / ow)
+    m = (target_height - 1) // mult
+    h = (m + 1) * mult
+
+    if target_height != h:
+        __print_size_warning(target_width, target_height, w, h)
+
+    return img.resize((w, h), Image.BICUBIC)
+
+
+def __print_size_warning(ow, oh, w, h):
+    if not hasattr(__print_size_warning, 'has_printed'):
+        print("The image size needs to be a multiple of 4. "
+              "The loaded image size was (%d, %d), so it was adjusted to "
+              "(%d, %d). This adjustment will be done to all images "
+              "whose sizes are not multiples of 4." % (ow, oh, w, h))
+        __print_size_warning.has_printed = True
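Both `__adjust` and `__scale_width` round a dimension up to the next multiple of 4. The small, PIL-free sketch below computes the sizes they would produce. The helper names are hypothetical; only the arithmetic follows the code above.

```python
def round_up_to_multiple(n, mult=4):
    """Smallest multiple of `mult` that is >= n (for n >= 1).

    Same arithmetic as __adjust: ((n - 1) // mult + 1) * mult.
    """
    return ((n - 1) // mult + 1) * mult


def adjusted_size(width, height, mult=4):
    """Size that __adjust would resize an image to (--resize_or_crop none)."""
    return round_up_to_multiple(width, mult), round_up_to_multiple(height, mult)


def scale_width_size(width, height, target_width, mult=4):
    """Size that __scale_width would produce: fixed width, aspect-preserving
    height rounded up to a multiple of `mult`."""
    assert target_width % mult == 0
    target_height = int(target_width * height / width)
    return target_width, round_up_to_multiple(target_height, mult)


print(adjusted_size(255, 256))           # (256, 256)
print(scale_width_size(1000, 750, 256))  # (256, 192)
```

Rounding up (rather than down) means the resize never discards a row or column of the source image. It only stretches the image slightly.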

diff --git a/data/base_dataset.pyc b/data/base_dataset.pyc

new file mode 100755

index 00000000..b83ebe95

Binary files /dev/null and b/data/base_dataset.pyc differ

diff --git a/data/image_folder.py b/data/image_folder.py

new file mode 100755

index 00000000..898200b2

--- /dev/null

+++ b/data/image_folder.py

@@ -0,0 +1,68 @@

+###############################################################################

+# Code from

+# https://github.com/pytorch/vision/blob/master/torchvision/datasets/folder.py

+# Modified the original code so that it also loads images from the current

+# directory as well as the subdirectories

+###############################################################################

+

+import torch.utils.data as data

+

+from PIL import Image

+import os

+import os.path

+

+IMG_EXTENSIONS = [

+ '.jpg', '.JPG', '.jpeg', '.JPEG',

+ '.png', '.PNG', '.ppm', '.PPM', '.bmp', '.BMP',

+]

+

+

+def is_image_file(filename):

+ return any(filename.endswith(extension) for extension in IMG_EXTENSIONS)

+

+

+def make_dataset(dir):
+    images = []
+    assert os.path.isdir(dir), '%s is not a valid directory' % dir
+
+    for root, _, fnames in sorted(os.walk(dir)):
+        for fname in fnames:
+            if is_image_file(fname):
+                path = os.path.join(root, fname)
+                images.append(path)
+
+    return images
+
+
+def default_loader(path):
+    return Image.open(path).convert('RGB')
+
+
+class ImageFolder(data.Dataset):
+
+    def __init__(self, root, transform=None, return_paths=False,
+                 loader=default_loader):
+        imgs = make_dataset(root)
+        if len(imgs) == 0:
+            raise(RuntimeError("Found 0 images in: " + root + "\n"
+                               "Supported image extensions are: " +
+                               ",".join(IMG_EXTENSIONS)))
+
+        self.root = root
+        self.imgs = imgs
+        self.transform = transform
+        self.return_paths = return_paths
+        self.loader = loader
+
+    def __getitem__(self, index):
+        path = self.imgs[index]
+        img = self.loader(path)
+        if self.transform is not None:
+            img = self.transform(img)
+        if self.return_paths:
+            return img, path
+        else:
+            return img
+
+    def __len__(self):
+        return len(self.imgs)
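`make_dataset` walks the directory tree and keeps files whose names end with one of the entries in `IMG_EXTENSIONS`. That check is case-sensitive, and the list only covers all-lowercase and all-uppercase spellings, so a file such as `photo.Jpg` is skipped. The sketch below is a hypothetical variant, not the repository's function. It lowercases the extension and sorts files and subdirectories so the order is deterministic. The demo runs on a temporary directory.

```python
import os
import tempfile

IMG_EXTENSIONS = ('.jpg', '.jpeg', '.png', '.ppm', '.bmp')


def list_images(root):
    """Recursively collect image paths under `root`, matching extensions
    case-insensitively (an assumed behavior, unlike is_image_file)."""
    if not os.path.isdir(root):
        raise NotADirectoryError('%s is not a valid directory' % root)
    paths = []
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # os.walk visits subdirectories in this (sorted) order
        for fname in sorted(filenames):
            if os.path.splitext(fname)[1].lower() in IMG_EXTENSIONS:
                paths.append(os.path.join(dirpath, fname))
    return paths


with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, 'sub'))
    for rel in ('a.png', 'b.Jpg', 'notes.txt', os.path.join('sub', 'c.JPEG')):
        open(os.path.join(root, rel), 'w').close()  # empty placeholder files
    found = [os.path.relpath(p, root) for p in list_images(root)]
print(found)
```

Whether mixed-case extensions should count as images is a project decision. The sketch just shows where that choice lives.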

diff --git a/data/image_folder.pyc b/data/image_folder.pyc

new file mode 100755

index 00000000..1db66574

Binary files /dev/null and b/data/image_folder.pyc differ

diff --git a/data/single_dataset.py b/data/single_dataset.py

new file mode 100755

index 00000000..c8b76550

--- /dev/null

+++ b/data/single_dataset.py

@@ -0,0 +1,42 @@

+import os.path

+from data.base_dataset import BaseDataset, get_transform

+from data.image_folder import make_dataset

+from PIL import Image

+

+

+class SingleDataset(BaseDataset):
+    @staticmethod
+    def modify_commandline_options(parser, is_train):
+        return parser
+
+    def initialize(self, opt):
+        self.opt = opt
+        self.root = opt.dataroot
+        self.dir_A = os.path.join(opt.dataroot)
+
+        self.A_paths = make_dataset(self.dir_A)
+
+        self.A_paths = sorted(self.A_paths)
+
+        self.transform = get_transform(opt)
+
+    def __getitem__(self, index):
+        A_path = self.A_paths[index]
+        A_img = Image.open(A_path).convert('RGB')
+        A = self.transform(A_img)
+        if self.opt.direction == 'BtoA':
+            input_nc = self.opt.output_nc
+        else:
+            input_nc = self.opt.input_nc
+
+        if input_nc == 1:  # RGB to gray
+            tmp = A[0, ...] * 0.299 + A[1, ...] * 0.587 + A[2, ...] * 0.114
+            A = tmp.unsqueeze(0)
+
+        return {'A': A, 'A_paths': A_path}
+
+    def __len__(self):
+        return len(self.A_paths)
+
+    def name(self):
+        return 'SingleImageDataset'
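When `input_nc == 1`, the datasets collapse RGB to one channel with the ITU-R BT.601 luma weights 0.299, 0.587 and 0.114. This happens after `Normalize` has mapped each channel from [0, 1] to [-1, 1]. Because the weights sum to 1, converting after normalization gives the same value as normalizing the gray value: sum_c w_c (2 x_c - 1) = 2 g - 1. The plain-Python sketch below uses nested lists instead of tensors. The helpers `rgb_to_gray` and `normalize` are hypothetical stand-ins for the tensor arithmetic.

```python
def rgb_to_gray(chw):
    """Collapse a 3 x H x W nested list to 1 x H x W with BT.601 luma weights."""
    r, g, b = chw
    gray = [[0.299 * rv + 0.587 * gv + 0.114 * bv
             for rv, gv, bv in zip(r_row, g_row, b_row)]
            for r_row, g_row, b_row in zip(r, g, b)]
    return [gray]  # keep a leading channel axis, like tensor.unsqueeze(0)


def normalize(x):
    """Map a [0, 1] value to [-1, 1], as Normalize(mean=0.5, std=0.5) does."""
    return (x - 0.5) / 0.5


# One pixel with raw RGB values in [0, 1]
raw = (0.2, 0.4, 0.6)
img = [[[normalize(v)]] for v in raw]  # 3 x 1 x 1, already normalized
gray_after = rgb_to_gray(img)[0][0][0]
gray_before = normalize(0.299 * raw[0] + 0.587 * raw[1] + 0.114 * raw[2])
print(gray_after, gray_before)  # equal up to floating-point rounding
```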

diff --git a/data/unaligned_dataset.py b/data/unaligned_dataset.py

new file mode 100755

index 00000000..de2eec2c

--- /dev/null

+++ b/data/unaligned_dataset.py

@@ -0,0 +1,61 @@

+import os.path

+from data.base_dataset import BaseDataset, get_transform

+from data.image_folder import make_dataset

+from PIL import Image

+import random

+

+

+class UnalignedDataset(BaseDataset):
+    @staticmethod
+    def modify_commandline_options(parser, is_train):
+        return parser
+
+    def initialize(self, opt):
+        self.opt = opt
+        self.root = opt.dataroot
+        self.dir_A = os.path.join(opt.dataroot, opt.phase + 'A')
+        self.dir_B = os.path.join(opt.dataroot, opt.phase + 'B')
+
+        self.A_paths = make_dataset(self.dir_A)
+        self.B_paths = make_dataset(self.dir_B)
+
+        self.A_paths = sorted(self.A_paths)
+        self.B_paths = sorted(self.B_paths)
+        self.A_size = len(self.A_paths)
+        self.B_size = len(self.B_paths)
+        self.transform = get_transform(opt)
+
+    def __getitem__(self, index):
+        A_path = self.A_paths[index % self.A_size]
+        if self.opt.serial_batches:
+            index_B = index % self.B_size
+        else:
+            index_B = random.randint(0, self.B_size - 1)
+        B_path = self.B_paths[index_B]
+        A_img = Image.open(A_path).convert('RGB')
+        B_img = Image.open(B_path).convert('RGB')
+
+        A = self.transform(A_img)
+        B = self.transform(B_img)
+        if self.opt.direction == 'BtoA':
+            input_nc = self.opt.output_nc
+            output_nc = self.opt.input_nc
+        else:
+            input_nc = self.opt.input_nc
+            output_nc = self.opt.output_nc
+
+        if input_nc == 1:  # RGB to gray
+            tmp = A[0, ...] * 0.299 + A[1, ...] * 0.587 + A[2, ...] * 0.114
+            A = tmp.unsqueeze(0)
+
+        if output_nc == 1:  # RGB to gray
+            tmp = B[0, ...] * 0.299 + B[1, ...] * 0.587 + B[2, ...] * 0.114
+            B = tmp.unsqueeze(0)
+        return {'A': A, 'B': B,
+                'A_paths': A_path, 'B_paths': B_path}
+
+    def __len__(self):
+        return max(self.A_size, self.B_size)
+
+    def name(self):
+        return 'UnalignedDataset'
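`UnalignedDataset` pairs images from two unrelated folders. A is visited in order, and B is drawn at random unless `--serial_batches` is set. An epoch has `max(A_size, B_size)` items, so indices into the smaller domain wrap around. The sketch below covers only the index selection; `unpaired_indices` is a hypothetical helper.

```python
import random


def unpaired_indices(index, a_size, b_size, serial, rng=random):
    """Return (index_A, index_B) the way UnalignedDataset.__getitem__ picks them."""
    index_a = index % a_size
    if serial:
        index_b = index % b_size
    else:
        index_b = rng.randint(0, b_size - 1)  # randint includes both endpoints
    return index_a, index_b


a_size, b_size = 3, 5
epoch_len = max(a_size, b_size)  # __len__ uses the larger domain
pairs = [unpaired_indices(i, a_size, b_size, serial=True) for i in range(epoch_len)]
print(pairs)  # [(0, 0), (1, 1), (2, 2), (0, 3), (1, 4)]
```

Random B indices mean each A image is seen against a different B image every epoch, which suits unpaired training.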

diff --git a/data/unaligned_dataset.pyc b/data/unaligned_dataset.pyc

new file mode 100755

index 00000000..28558741

Binary files /dev/null and b/data/unaligned_dataset.pyc differ

diff --git a/datasets/bibtex/cityscapes.tex b/datasets/bibtex/cityscapes.tex

new file mode 100755

index 00000000..a87bdbf5

--- /dev/null

+++ b/datasets/bibtex/cityscapes.tex

@@ -0,0 +1,6 @@

+@inproceedings{Cordts2016Cityscapes,

+title={The Cityscapes Dataset for Semantic Urban Scene Understanding},

+author={Cordts, Marius and Omran, Mohamed and Ramos, Sebastian and Rehfeld, Timo and Enzweiler, Markus and Benenson, Rodrigo and Franke, Uwe and Roth, Stefan and Schiele, Bernt},

+booktitle={Proc. of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

+year={2016}

+}

diff --git a/datasets/bibtex/facades.tex b/datasets/bibtex/facades.tex

new file mode 100755

index 00000000..08b773e1

--- /dev/null

+++ b/datasets/bibtex/facades.tex

@@ -0,0 +1,7 @@

+@INPROCEEDINGS{Tylecek13,

+ author = {Radim Tyle{\v c}ek and Radim {\v S}{\' a}ra},

+ title = {Spatial Pattern Templates for Recognition of Objects with Regular Structure},

+ booktitle = {Proc. GCPR},

+ year = {2013},

+ address = {Saarbrucken, Germany},

+}

diff --git a/datasets/bibtex/handbags.tex b/datasets/bibtex/handbags.tex

new file mode 100755

index 00000000..b79710c7

--- /dev/null

+++ b/datasets/bibtex/handbags.tex

@@ -0,0 +1,13 @@

+@inproceedings{zhu2016generative,

+ title={Generative Visual Manipulation on the Natural Image Manifold},

+ author={Zhu, Jun-Yan and Kr{\"a}henb{\"u}hl, Philipp and Shechtman, Eli and Efros, Alexei A.},

+ booktitle={Proceedings of European Conference on Computer Vision (ECCV)},

+ year={2016}

+}

+

+@InProceedings{xie15hed,

+ author = {Xie, Saining and Tu, Zhuowen},

+ Title = {Holistically-Nested Edge Detection},

+ Booktitle = "Proceedings of IEEE International Conference on Computer Vision",

+ Year = {2015},

+}

diff --git a/datasets/bibtex/shoes.tex b/datasets/bibtex/shoes.tex

new file mode 100755

index 00000000..e67e158b

--- /dev/null

+++ b/datasets/bibtex/shoes.tex

@@ -0,0 +1,14 @@

+@InProceedings{fine-grained,

+ author = {A. Yu and K. Grauman},

+ title = {{F}ine-{G}rained {V}isual {C}omparisons with {L}ocal {L}earning},

+ booktitle = {Computer Vision and Pattern Recognition (CVPR)},

+ month = {June},

+ year = {2014}

+}

+

+@InProceedings{xie15hed,

+ author = {Xie, Saining and Tu, Zhuowen},

+ Title = {Holistically-Nested Edge Detection},

+ Booktitle = "Proceedings of IEEE International Conference on Computer Vision",

+ Year = {2015},

+}

diff --git a/datasets/bibtex/transattr.tex b/datasets/bibtex/transattr.tex

new file mode 100755

index 00000000..05858499

--- /dev/null

+++ b/datasets/bibtex/transattr.tex

@@ -0,0 +1,8 @@

+@article {Laffont14,

+ title = {Transient Attributes for High-Level Understanding and Editing of Outdoor Scenes},

+ author = {Pierre-Yves Laffont and Zhile Ren and Xiaofeng Tao and Chao Qian and James Hays},

+ journal = {ACM Transactions on Graphics (proceedings of SIGGRAPH)},

+ volume = {33},

+ number = {4},

+ year = {2014}

+}

diff --git a/datasets/combine_A_and_B.py b/datasets/combine_A_and_B.py

new file mode 100755

index 00000000..c69dc567

--- /dev/null

+++ b/datasets/combine_A_and_B.py

@@ -0,0 +1,48 @@

+import os

+import numpy as np

+import cv2

+import argparse

+

+parser = argparse.ArgumentParser('create image pairs')

+parser.add_argument('--fold_A', dest='fold_A', help='input directory for image A', type=str, default='../dataset/50kshoes_edges')

+parser.add_argument('--fold_B', dest='fold_B', help='input directory for image B', type=str, default='../dataset/50kshoes_jpg')

+parser.add_argument('--fold_AB', dest='fold_AB', help='output directory', type=str, default='../dataset/test_AB')

+parser.add_argument('--num_imgs', dest='num_imgs', help='number of images', type=int, default=1000000)

+parser.add_argument('--use_AB', dest='use_AB', help='if true: (0001_A, 0001_B) to (0001_AB)', action='store_true')

+args = parser.parse_args()

+

+for arg in vars(args):

+ print('[%s] = ' % arg, getattr(args, arg))

+

+splits = os.listdir(args.fold_A)

+

+for sp in splits:

+ img_fold_A = os.path.join(args.fold_A, sp)

+ img_fold_B = os.path.join(args.fold_B, sp)

+ img_list = os.listdir(img_fold_A)

+ if args.use_AB:

+ img_list = [img_path for img_path in img_list if '_A.' in img_path]

+

+ num_imgs = min(args.num_imgs, len(img_list))

+ print('split = %s, use %d/%d images' % (sp, num_imgs, len(img_list)))

+ img_fold_AB = os.path.join(args.fold_AB, sp)

+ if not os.path.isdir(img_fold_AB):

+ os.makedirs(img_fold_AB)

+ print('split = %s, number of images = %d' % (sp, num_imgs))

+ for n in range(num_imgs):

+ name_A = img_list[n]

+ path_A = os.path.join(img_fold_A, name_A)

+ if args.use_AB:

+ name_B = name_A.replace('_A.', '_B.')

+ else:

+ name_B = name_A

+ path_B = os.path.join(img_fold_B, name_B)

+ if os.path.isfile(path_A) and os.path.isfile(path_B):

+ name_AB = name_A

+ if args.use_AB:

+ name_AB = name_AB.replace('_A.', '.') # remove _A

+ path_AB = os.path.join(img_fold_AB, name_AB)

+ im_A = cv2.imread(path_A, cv2.IMREAD_COLOR)

+ im_B = cv2.imread(path_B, cv2.IMREAD_COLOR)

+ im_AB = np.concatenate([im_A, im_B], 1)

+ cv2.imwrite(path_AB, im_AB)
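The core of this script is a horizontal concatenation. `np.concatenate([im_A, im_B], 1)` places A in the left half and B in the right half of a single image. Below is a minimal NumPy sketch of that step, assuming both images share the same height, width, and channel count. It also includes a hypothetical `split_pair` inverse that shows how a loader could recover the two halves.

```python
import numpy as np


def combine_pair(im_a, im_b):
    """Place A on the left and B on the right, as combine_A_and_B.py does.

    Both inputs are H x W x C arrays (the layout cv2.imread returns);
    concatenating along axis 1 joins them horizontally.
    """
    if im_a.shape != im_b.shape:
        raise ValueError("A and B must have the same shape, got %s and %s"
                         % (im_a.shape, im_b.shape))
    return np.concatenate([im_a, im_b], axis=1)


def split_pair(im_ab):
    """Hypothetical inverse: cut an H x 2W x C image back into (A, B)."""
    w = im_ab.shape[1] // 2
    return im_ab[:, :w], im_ab[:, w:]


a = np.zeros((4, 3, 3), dtype=np.uint8)
b = np.full((4, 3, 3), 255, dtype=np.uint8)
print(combine_pair(a, b).shape)  # (4, 6, 3)
```

Unlike the script, `combine_pair` rejects mismatched shapes up front. With `np.concatenate` alone, two images of equal height but different width would be joined silently, and the two halves would no longer line up.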

diff --git a/datasets/download_cyclegan_dataset.sh b/datasets/download_cyclegan_dataset.sh

new file mode 100755

index 00000000..bfa64141

--- /dev/null

+++ b/datasets/download_cyclegan_dataset.sh

@@ -0,0 +1,15 @@

+FILE=$1

+

+if [[ $FILE != "ae_photos" && $FILE != "apple2orange" && $FILE != "summer2winter_yosemite" && $FILE != "horse2zebra" && $FILE != "monet2photo" && $FILE != "cezanne2photo" && $FILE != "ukiyoe2photo" && $FILE != "vangogh2photo" && $FILE != "maps" && $FILE != "cityscapes" && $FILE != "facades" && $FILE != "iphone2dslr_flower" && $FILE != "mini" && $FILE != "mini_pix2pix" ]]; then

+ echo "Available datasets are: apple2orange, summer2winter_yosemite, horse2zebra, monet2photo, cezanne2photo, ukiyoe2photo, vangogh2photo, maps, cityscapes, facades, iphone2dslr_flower, ae_photos, mini, mini_pix2pix"

+ exit 1

+fi

+

+echo "Specified [$FILE]"

+URL=https://people.eecs.berkeley.edu/~taesung_park/CycleGAN/datasets/$FILE.zip

+ZIP_FILE=./datasets/$FILE.zip

+TARGET_DIR=./datasets/$FILE/

+wget -N $URL -O $ZIP_FILE

+mkdir -p $TARGET_DIR

+unzip $ZIP_FILE -d ./datasets/

+rm $ZIP_FILE

diff --git a/datasets/download_pix2pix_dataset.sh b/datasets/download_pix2pix_dataset.sh

new file mode 100755

index 00000000..e4987227

--- /dev/null

+++ b/datasets/download_pix2pix_dataset.sh

@@ -0,0 +1,16 @@

+FILE=$1

+

+if [[ $FILE != "cityscapes" && $FILE != "night2day" && $FILE != "edges2handbags" && $FILE != "edges2shoes" && $FILE != "facades" && $FILE != "maps" ]]; then

+ echo "Available datasets are cityscapes, night2day, edges2handbags, edges2shoes, facades, maps"

+ exit 1

+fi

+

+echo "Specified [$FILE]"

+

+URL=http://efrosgans.eecs.berkeley.edu/pix2pix/datasets/$FILE.tar.gz

+TAR_FILE=./datasets/$FILE.tar.gz

+TARGET_DIR=./datasets/$FILE/

+wget -N $URL -O $TAR_FILE

+mkdir -p $TARGET_DIR

+tar -zxvf $TAR_FILE -C ./datasets/

+rm $TAR_FILE

diff --git a/datasets/make_dataset_aligned.py b/datasets/make_dataset_aligned.py

new file mode 100755

index 00000000..739c7679

--- /dev/null

+++ b/datasets/make_dataset_aligned.py

@@ -0,0 +1,63 @@

+import os

+

+from PIL import Image

+

+

+def get_file_paths(folder):

+ image_file_paths = []

+ for root, dirs, filenames in os.walk(folder):

+ filenames = sorted(filenames)

+ for filename in filenames:

+ input_path = os.path.abspath(root)

+ file_path = os.path.join(input_path, filename)

+ if filename.endswith('.png') or filename.endswith('.jpg'):

+ image_file_paths.append(file_path)

+

+ break # prevent descending into subfolders

+ return image_file_paths

+

+

+def align_images(a_file_paths, b_file_paths, target_path):

+ if not os.path.exists(target_path):

+ os.makedirs(target_path)

+

+ for i in range(len(a_file_paths)):

+ img_a = Image.open(a_file_paths[i])

+ img_b = Image.open(b_file_paths[i])

+ assert(img_a.size == img_b.size)

+

+ aligned_image = Image.new("RGB", (img_a.size[0] * 2, img_a.size[1]))

+ aligned_image.paste(img_a, (0, 0))

+ aligned_image.paste(img_b, (img_a.size[0], 0))

+ aligned_image.save(os.path.join(target_path, '{:04d}.jpg'.format(i)))

+

+

+if __name__ == '__main__':

+ import argparse

+ parser = argparse.ArgumentParser()

+ parser.add_argument(

+ '--dataset-path',

+ dest='dataset_path',

+ help='Which folder to process (it should have subfolders testA, testB, trainA and trainB)'

+ )

+ args = parser.parse_args()

+

+ dataset_folder = args.dataset_path

+ print(dataset_folder)

+

+ test_a_path = os.path.join(dataset_folder, 'testA')

+ test_b_path = os.path.join(dataset_folder, 'testB')

+ test_a_file_paths = get_file_paths(test_a_path)

+ test_b_file_paths = get_file_paths(test_b_path)

+ assert(len(test_a_file_paths) == len(test_b_file_paths))

+ test_path = os.path.join(dataset_folder, 'test')

+

+ train_a_path = os.path.join(dataset_folder, 'trainA')

+ train_b_path = os.path.join(dataset_folder, 'trainB')

+ train_a_file_paths = get_file_paths(train_a_path)

+ train_b_file_paths = get_file_paths(train_b_path)

+ assert(len(train_a_file_paths) == len(train_b_file_paths))

+ train_path = os.path.join(dataset_folder, 'train')

+

+ align_images(test_a_file_paths, test_b_file_paths, test_path)

+ align_images(train_a_file_paths, train_b_file_paths, train_path)

diff --git a/docs/datasets.md b/docs/datasets.md

new file mode 100755

index 00000000..42e88a40

--- /dev/null

+++ b/docs/datasets.md

@@ -0,0 +1,44 @@

+

+

+### CycleGAN Datasets

+Download the CycleGAN datasets using the following script. Some of the datasets are collected by other researchers. Please cite their papers if you use the data.

+```bash

+bash ./datasets/download_cyclegan_dataset.sh dataset_name

+```

+- `facades`: 400 images from the [CMP Facades dataset](http://cmp.felk.cvut.cz/~tylecr1/facade). [[Citation](../datasets/bibtex/facades.tex)]

+- `cityscapes`: 2975 images from the [Cityscapes training set](https://www.cityscapes-dataset.com). [[Citation](../datasets/bibtex/cityscapes.tex)]

+- `maps`: 1096 training images scraped from Google Maps.

+- `horse2zebra`: 939 horse images and 1177 zebra images downloaded from [ImageNet](http://www.image-net.org) using keywords `wild horse` and `zebra`.

+- `apple2orange`: 996 apple images and 1020 orange images downloaded from [ImageNet](http://www.image-net.org) using keywords `apple` and `navel orange`.

+- `summer2winter_yosemite`: 1273 summer Yosemite images and 854 winter Yosemite images were downloaded using the Flickr API. See more details in our paper.

+- `monet2photo`, `vangogh2photo`, `ukiyoe2photo`, `cezanne2photo`: The art images were downloaded from [Wikiart](https://www.wikiart.org/). The real photos were downloaded from Flickr using the combination of the tags *landscape* and *landscapephotography*. The training set size of each class is Monet:1074, Cezanne:584, Van Gogh:401, Ukiyo-e:1433, Photographs:6853.

+- `iphone2dslr_flower`: both classes of images were downloaded from Flickr. The training set size of each class is iPhone:1813, DSLR:3316. See more details in our paper.

+

+To train a model on your own datasets, you need to create a data folder with two subdirectories `trainA` and `trainB` that contain images from domains A and B. You can test your model on your training set by setting `--phase train` in `test.py`. You can also create subdirectories `testA` and `testB` if you have test data.
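The folder layout described above can be created and checked with a few lines of Python. This is a minimal sketch; only the subfolder names `trainA`, `trainB`, `testA` and `testB` come from this page, and `make_cyclegan_layout` and `missing_subfolders` are hypothetical helpers.

```python
import os
import tempfile

# Subfolder names expected for an unaligned (CycleGAN) dataset.
REQUIRED = ("trainA", "trainB")
OPTIONAL = ("testA", "testB")


def make_cyclegan_layout(root, with_test=True):
    """Create trainA/trainB (and optionally testA/testB) under `root`."""
    names = REQUIRED + (OPTIONAL if with_test else ())
    for name in names:
        os.makedirs(os.path.join(root, name), exist_ok=True)
    return sorted(names)


def missing_subfolders(root):
    """Return the required subfolders that do not exist yet."""
    return [n for n in REQUIRED if not os.path.isdir(os.path.join(root, n))]


with tempfile.TemporaryDirectory() as root:
    print(missing_subfolders(root))  # ['trainA', 'trainB']
    make_cyclegan_layout(root)
    print(missing_subfolders(root))  # []
```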

+

+You should **not** expect our method to work on just any random combination of input and output datasets (e.g. `cats<->keyboards`). From our experiments, we find it works better if the two datasets share similar visual content. For example, `landscape painting<->landscape photographs` works much better than `portrait painting<->landscape photographs`. `zebras<->horses` achieves compelling results while `cats<->dogs` completely fails.

+

+### pix2pix datasets

+Download the pix2pix datasets using the following script. Some of the datasets are collected by other researchers. Please cite their papers if you use the data.

+```bash

+bash ./datasets/download_pix2pix_dataset.sh dataset_name

+```

+- `facades`: 400 images from the [CMP Facades dataset](http://cmp.felk.cvut.cz/~tylecr1/facade). [[Citation](../datasets/bibtex/facades.tex)]

+- `cityscapes`: 2975 images from the [Cityscapes training set](https://www.cityscapes-dataset.com). [[Citation](../datasets/bibtex/cityscapes.tex)]

+- `maps`: 1096 training images scraped from Google Maps.

+- `edges2shoes`: 50k training images from the [UT Zappos50K dataset](http://vision.cs.utexas.edu/projects/finegrained/utzap50k). Edges are computed by the [HED](https://github.com/s9xie/hed) edge detector + post-processing. [[Citation](../datasets/bibtex/shoes.tex)]

+- `edges2handbags`: 137K Amazon Handbag images from the [iGAN project](https://github.com/junyanz/iGAN). Edges are computed by the [HED](https://github.com/s9xie/hed) edge detector + post-processing. [[Citation](../datasets/bibtex/handbags.tex)]

+- `night2day`: around 20K natural scene images from the [Transient Attributes dataset](http://transattr.cs.brown.edu/) [[Citation](../datasets/bibtex/transattr.tex)]. To train a `day2night` pix2pix model, you need to add `--direction BtoA`.

+

+We provide a Python script to generate pix2pix training data in the form of pairs of images {A,B}, where A and B are two different depictions of the same underlying scene. For example, these might be pairs {label map, photo} or {bw image, color image}. Then we can learn to translate A to B or B to A:

+

+Create folder `/path/to/data` with subfolders `A` and `B`. `A` and `B` should each have their own subfolders `train`, `val`, `test`, etc. In `/path/to/data/A/train`, put training images in style A. In `/path/to/data/B/train`, put the corresponding images in style B. Repeat the same for the other data splits (`val`, `test`, etc.).

+

+Corresponding images in a pair {A,B} must be the same size and have the same filename, e.g., `/path/to/data/A/train/1.jpg` is considered to correspond to `/path/to/data/B/train/1.jpg`.
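`combine_A_and_B.py` pairs files by name and silently skips any file whose partner is missing, so it can help to list unpaired files before combining. Below is a minimal sketch, assuming the default mode without `--use_AB`, where A and B share identical filenames. `find_unpaired` is a hypothetical helper, and it does not check that paired images have the same size.

```python
import os
import tempfile


def find_unpaired(dir_a, dir_b):
    """Return (only_in_a, only_in_b): filenames that have no partner."""
    names_a = set(os.listdir(dir_a))
    names_b = set(os.listdir(dir_b))
    return sorted(names_a - names_b), sorted(names_b - names_a)


with tempfile.TemporaryDirectory() as root:
    dir_a, dir_b = os.path.join(root, "A"), os.path.join(root, "B")
    os.makedirs(dir_a)
    os.makedirs(dir_b)
    for name in ("1.jpg", "2.jpg"):
        open(os.path.join(dir_a, name), "wb").close()
    open(os.path.join(dir_b, "1.jpg"), "wb").close()
    print(find_unpaired(dir_a, dir_b))  # (['2.jpg'], [])
```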

+

+Once the data is formatted this way, call:

+```bash

+python datasets/combine_A_and_B.py --fold_A /path/to/data/A --fold_B /path/to/data/B --fold_AB /path/to/data

+```

+

+This will combine each pair of images (A,B) into a single image file, ready for training.

diff --git a/docs/qa.md b/docs/qa.md

new file mode 100755

index 00000000..6501ccb9

--- /dev/null

+++ b/docs/qa.md

@@ -0,0 +1,107 @@

+## Frequently Asked Questions

+Before you post a new question, please first look at the following Q & A and existing GitHub issues. You may also want to read [Training/Test tips](tips.md) for more suggestions.

+

+#### Connection Error:HTTPConnectionPool ([#230](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/230), [#24](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/24), [#38](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/38))

+Similar error messages include “Failed to establish a new connection/Connection refused”.

+

+Please start the visdom server before starting the training:

+```bash

+python -m visdom.server

+```

+To install visdom, you can use the following command:

+```bash

+pip install visdom

+```

+You can also disable visdom by setting `--display_id 0`.

+

+#### My PyTorch errors on CUDA related code.

+Try running the following code snippet to make sure that CUDA is working (assuming PyTorch >= 0.4):

+```python

+import torch

+torch.cuda.init()

+print(torch.randn(1, device='cuda'))

+```

+

+If you get an error, it is likely that your PyTorch build does not work with CUDA, e.g., it was installed from the official macOS binary, or you have a GPU that is too old and no longer supported. You can run the code on the CPU using `--gpu_ids -1`.

+

+#### TypeError: Object of type 'Tensor' is not JSON serializable ([#258](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/258))

+Similar errors: AttributeError: module 'torch' has no attribute 'device' ([#314](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/314))

+

+The current code only works with PyTorch 0.4+. An earlier PyTorch version can often cause the above errors.

+

+#### ValueError: empty range for randrange() ([#390](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/390), [#376](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/376), [#194](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/194))

+Similar error messages include "ConnectionRefusedError: [Errno 111] Connection refused"

+

+It is related to the data augmentation step. It often happens when you use `--resize_or_crop crop`. The program crops random `fineSize x fineSize` patches out of the input training images, so if some of your images (e.g., `256x384`) are smaller than `fineSize` (e.g., 512), you will get this error. A simple fix is to use another data augmentation method such as `--resize_or_crop resize_and_crop` or `--resize_or_crop scale_width_and_crop`. The program will then automatically resize the images according to `loadSize` before applying `fineSize x fineSize` cropping. Make sure that `loadSize >= fineSize`.
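The error comes from drawing a random crop offset when the image is smaller than the crop. This sketch assumes the offset is drawn as `random.randint(0, size - fineSize)`. For example, `randint(0, 256 - 512)` has an empty range and raises this `ValueError`. The hypothetical `random_crop_box` adds an explicit size check so the failure message names the real cause.

```python
import random


def random_crop_box(width, height, fine_size, rng=random):
    """Return (left, top, right, bottom) of a random fine_size x fine_size crop."""
    if width < fine_size or height < fine_size:
        # Without this check, rng.randint(0, width - fine_size) would raise
        # a ValueError about an empty range for randrange().
        raise ValueError(
            "image is %dx%d but fineSize is %d; resize it first "
            "(e.g. resize_and_crop) or lower fineSize" % (width, height, fine_size))
    left = rng.randint(0, width - fine_size)
    top = rng.randint(0, height - fine_size)
    return left, top, left + fine_size, top + fine_size


print(random_crop_box(300, 256, 256, random.Random(0)))  # left in [0, 44], top == 0
```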

+

+

+#### Can I continue/resume my training? ([#350](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/350), [#275](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/275), [#234](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/234), [#87](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/87))

+You can use the option `--continue_train`. Also set `--epoch_count` to specify a different starting epoch count. See more discussion in [training/test tips](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/docs/tips.md#trainingtest-tips).

+

+#### Why does my training loss not converge? ([#335](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/335), [#164](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/164), [#30](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/30))

+Many GAN losses do not converge (exceptions: WGAN, WGAN-GP, etc.) due to the nature of minimax optimization. For the DCGAN and LSGAN objectives, it is quite normal for the G and D losses to go up and down. It should be fine as long as they do not blow up.

+

+#### How can I make it work for my own data (e.g., 16-bit png, tiff, hyperspectral images)? ([#309](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/309), [#320](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/320), [#202](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/202))

+The current code only supports RGB and grayscale images. If you would like to train the model on other data types, please follow these steps:

+

+- Change the parameters `--input_nc` and `--output_nc` to the number of channels in your input/output images.

+- Write your own custom data loader (it is easy as long as you know how to load your data with Python). If you write a new data loader class, you need to change the flag `--dataset_mode` accordingly. Alternatively, you can modify the existing data loader. For aligned datasets, change this [line](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/data/aligned_dataset.py#L24); for unaligned datasets, change these two [lines](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/data/unaligned_dataset.py#L36).

+

+- If you use visdom and HTML to visualize the results, you may also need to change the visualization code.

+

+#### Multi-GPU Training ([#327](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/327), [#292](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/292), [#137](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/137), [#35](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/35))

+You can use multi-GPU training by setting `--gpu_ids` (e.g., `--gpu_ids 0,1,2,3` for the first four GPUs on your machine). To fully utilize all the GPUs, you need to increase your batch size. Try `--batch_size 4`, `--batch_size 16`, or an even larger batch size. Each GPU will process batch_size/#GPUs images. The optimal batch size depends on the number of GPUs you have, the memory per GPU, and the resolution of your training images.

+

+We also recommend that you use instance normalization for multi-GPU training by setting `--norm instance`. The current batch normalization might not work well for multi-GPU training because the batchnorm statistics are computed separately on each GPU and are not synchronized across GPUs. Advanced users can try [synchronized batchnorm](https://github.com/vacancy/Synchronized-BatchNorm-PyTorch).

+

+

+#### Can I run the model on CPU? ([#310](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/310))

+Yes, you can set `--gpu_ids -1`. See [training/test tips](tips.md) for more details.

+

+

+#### Are pre-trained models available? ([#10](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/10))

+Yes, you can download pretrained models with the bash script `./scripts/download_cyclegan_model.sh`. See [here](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix#apply-a-pre-trained-model-cyclegan) for more details. We are slowly adding more models to the repo.

+

+#### Out of memory ([#174](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/174))

+CycleGAN is more memory-intensive than pix2pix as it requires two generators and two discriminators. If you would like to produce high-resolution images, you can do the following.

+

+- During training, train CycleGAN on cropped images of the training set. Please be careful not to change the aspect ratio or the scale of the original image, as this can lead to a training/test gap. You can usually do this by using the `--resize_or_crop crop` option or `--resize_or_crop scale_width_and_crop`.

+

+- Then at test time, you can load only one generator to produce the results in a single direction. This greatly saves GPU memory as you are not loading the discriminators and the other generator in the opposite direction. You can probably take the whole image as input. You can do this using `--model test --dataroot [path to the directory that contains your test images (e.g., ./datasets/horse2zebra/trainA)] --model_suffix _A --resize_or_crop none`. You can use either `--resize_or_crop none` or `--resize_or_crop scale_width --fineSize [your_desired_image_width]`. Please see the [model_suffix](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/models/test_model.py#L16) and [resize_or_crop](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/blob/master/data/base_dataset.py#L24) for more details.

+

+#### What is the identity loss? ([#322](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/322), [#373](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/373), [#362](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/pull/362))

+We use the identity loss for our photo-to-painting application. The identity loss regularizes the generator to be close to an identity mapping when fed with real samples from the *target* domain: if something already looks like it comes from the target domain, the generator should preserve the image without making additional changes. A generator trained with this loss will often be more conservative with unknown content. Please see more details in Sec 5.2 "Photo generation from paintings" and Figure 12 in the CycleGAN [paper](https://arxiv.org/pdf/1703.10593.pdf). The loss was first proposed in Equation 6 of the prior work [[Taigman et al., 2017]](https://arxiv.org/pdf/1611.02200.pdf).
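In the CycleGAN paper, the identity term is an L1 penalty between G(y) and y, where y is a real image from the target domain. Below is a minimal NumPy sketch of that form. The scalar `weight` is an assumption (its default here is arbitrary), and the generator is any callable. A perfect identity mapping costs nothing, while a color-inverting generator is penalized heavily.

```python
import numpy as np


def identity_loss(generator, real_target, weight=0.5):
    """weight * mean |G(y) - y| for a batch y drawn from the target domain."""
    fake = generator(real_target)
    return weight * np.mean(np.abs(fake - real_target))


# Pixel values in [-1, 1], a common range for GAN inputs.
y = np.linspace(-1.0, 1.0, 8).reshape(2, 4)
print(identity_loss(lambda x: x, y))               # 0.0 (identity mapping)
print(identity_loss(lambda x: -x, y, weight=1.0))  # 8/7, about 1.143 (inverted colors)
```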

+

+#### The color gets inverted from the beginning of training ([#249](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/249))

+The authors also observe that the generator unnecessarily inverts the color of the input image early in training, and then never learns to undo the inversion. In this case, you can try two things.

+

+- First, try using the identity loss `--identity 1.0` or `--identity 0.1`. We observe that the identity loss makes the generator more conservative, so it makes fewer unnecessary changes. However, because of this, the change may not be as dramatic.

+

+- Second, try smaller variance when initializing weights by changing `--init_gain`. We observe that smaller variance in weight initialization results in less color inversion.
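To see what "smaller variance" means in practice, this sketch assumes a normal initializer that uses `init_gain` as the standard deviation, drawing weights from N(0, init_gain²). The value 0.02 is only an example. `init_normal` is a hypothetical NumPy helper, not the repository's initialization code.

```python
import numpy as np


def init_normal(shape, init_gain=0.02, seed=0):
    """Draw weights from N(0, init_gain**2): init_gain acts as the std."""
    rng = np.random.default_rng(seed)
    return rng.normal(loc=0.0, scale=init_gain, size=shape)


# A 3x3 conv layer with 64 input and 64 output channels.
for gain in (0.02, 0.005):
    w = init_normal((64, 64, 3, 3), init_gain=gain)
    print(gain, float(w.std()))  # the empirical std tracks init_gain
```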

+

+#### For labels2photo Cityscapes evaluation, why does the pretrained FCN-8s model not work well on the original Cityscapes input images? ([#150](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/150))

+The model was trained on 256x256 images that were resized/upsampled to 1024x2048, so the input images the network expects are very blurry. The purpose of the resizing was to 1) keep the label maps untouched at the original high resolution and 2) avoid the need to change the standard FCN training code for Cityscapes.

+

+#### How do I get the `ground-truth` numbers on the labels2photo Cityscapes evaluation? ([#150](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/150))

+You need to resize the original Cityscapes images to 256x256 before running the evaluation code.

+

+

+#### Using resize-conv to reduce checkerboard artifacts ([#190](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/190), [#64](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/64))

+This Distill [article](https://distill.pub/2016/deconv-checkerboard/) discusses one of the potential causes of checkerboard artifacts. You can fix that issue by switching from "deconvolution" (transposed convolution) to upsampling (e.g., nearest-neighbor or bilinear) followed by a regular convolution. Here is one implementation provided by [@SsnL](https://github.com/SsnL), which uses bilinear upsampling. You can replace the ConvTranspose2d with the following layers.

+```python

+nn.Upsample(scale_factor=2, mode='bilinear'),

+nn.ReflectionPad2d(1),

+nn.Conv2d(ngf * mult, int(ngf * mult / 2), kernel_size=3, stride=1, padding=0),

+```

+We have also noticed that the checkerboard artifacts sometimes go away if you train long enough, so you may also want to try training your model a bit longer.
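Swapping the layers should not change the feature-map size. The arithmetic below checks this with the standard PyTorch output-size formulas for `Conv2d` and `ConvTranspose2d`, with dilation 1. It assumes the transposed convolution being replaced uses `kernel_size=3, stride=2, padding=1, output_padding=1`, a common choice for 2x upsampling; check your own generator's settings. The helper names are hypothetical.

```python
def conv2d_out(size, kernel, stride=1, padding=0):
    # Conv2d output size: floor((size + 2*padding - kernel) / stride) + 1
    return (size + 2 * padding - kernel) // stride + 1


def conv_transpose2d_out(size, kernel, stride=1, padding=0, output_padding=0):
    # ConvTranspose2d output size with dilation 1
    return (size - 1) * stride - 2 * padding + kernel + output_padding


def resize_conv_out(size):
    # Upsample(scale_factor=2) -> ReflectionPad2d(1) -> 3x3 conv, stride 1, padding 0
    return conv2d_out(size * 2 + 2, kernel=3)


for h in (64, 65):
    print(h, conv_transpose2d_out(h, 3, stride=2, padding=1, output_padding=1), resize_conv_out(h))
# 64 128 128
# 65 130 130
```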

+

+#### pix2pix/CycleGAN has no random noise z ([#152](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/152))

+The current pix2pix/CycleGAN model does not take z as input. In both pix2pix and CycleGAN, we tried to add z to the generator (e.g., adding z to a latent state, concatenating it with a latent state, applying dropout, etc.), but often found the output did not vary significantly as a function of z. Conditional GANs do not need noise as long as the input is sufficiently complex, so that the input itself can play the role of noise. Without noise, the mapping is deterministic.

+

+Please check out the following papers that show ways of getting z to actually have a substantial effect: e.g., [BicycleGAN](https://github.com/junyanz/BicycleGAN), [AugmentedCycleGAN](https://arxiv.org/abs/1802.10151), [MUNIT](https://arxiv.org/abs/1804.04732), [DRIT](https://arxiv.org/pdf/1808.00948.pdf), etc.

+

+#### Experiment details (e.g., BW->color) ([#306](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix/issues/306))

+You can find more training details and hyperparameter settings in the appendix of [CycleGAN](https://arxiv.org/abs/1703.10593) and [pix2pix](https://arxiv.org/abs/1611.07004) papers.

+

+#### Results with [Cycada](https://arxiv.org/pdf/1711.03213.pdf)

+We generated the [result of translating GTA images to Cityscapes-style images](https://junyanz.github.io/CycleGAN/) using our Torch repo. Our PyTorch and Torch implementations seem to produce slightly different results, although we have not measured the FCN score using the PyTorch-trained model. To reproduce the result of Cycada, please use the Torch repo for now.

diff --git a/docs/tips.md b/docs/tips.md

new file mode 100755

index 00000000..5d827a10

--- /dev/null

+++ b/docs/tips.md

@@ -0,0 +1,26 @@

+## Training/test Tips

+#### Training/test options

+Please see `options/train_options.py` and `options/base_options.py` for the training flags; see `options/test_options.py` and `options/base_options.py` for the test flags. There are some model-specific flags as well, which are added in the model files, such as the `--lambda_A` option in `models/cycle_gan_model.py`. The default values of these options are also adjusted in the model files.

+#### CPU/GPU (default `--gpu_ids 0`)

+Please set `--gpu_ids -1` to use CPU mode; set `--gpu_ids 0,1,2` for multi-GPU mode. You need a large batch size (e.g., `--batch_size 32`) to benefit from multiple GPUs.

+

+#### Visualization

+During training, the current results can be viewed using two methods. First, if you set `--display_id` > 0, the results and loss plot will appear on a local graphics web server launched by [visdom](https://github.com/facebookresearch/visdom). To do this, you should have `visdom` installed and a server running by the command `python -m visdom.server`. The default server URL is `http://localhost:8097`. `display_id` corresponds to the window ID that is displayed on the `visdom` server. The `visdom` display functionality is turned on by default. To avoid the extra overhead of communicating with `visdom`, set `--display_id -1`. Second, the intermediate results are saved to `[opt.checkpoints_dir]/[opt.name]/web/` as an HTML file. To avoid this, set `--no_html`.

+

+#### Preprocessing

+Images can be resized and cropped in different ways using the `--resize_or_crop` option. The default option `'resize_and_crop'` resizes the image to be of size `(opt.loadSize, opt.loadSize)` and does a random crop of size `(opt.fineSize, opt.fineSize)`. `'crop'` skips the resizing step and only performs random cropping. `'scale_width'` resizes the image to have width `opt.fineSize` while keeping the aspect ratio. `'scale_width_and_crop'` first resizes the image to have width `opt.loadSize` and then does random cropping of size `(opt.fineSize, opt.fineSize)`. `'none'` tries to skip all these preprocessing steps. However, if the image size is not a multiple of some number that depends on the number of downsamplings in the generator, you will get an error because the size of the output image may differ from the size of the input image. Therefore, the `'none'` option still tries to adjust the image size to be a multiple of 4. You might need a bigger adjustment if you change the generator architecture. Please see `data/base_dataset.py` for how all of these are implemented.
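These modes differ mainly in the intermediate size produced before the random crop. The sketch below tabulates the intermediate and final sizes for each mode described above, except `'none'`. It assumes that `scale_width` truncates the new height with `int()`; the exact rounding in `data/base_dataset.py` may differ. `preprocess_sizes` and `scale_width_size` are hypothetical helpers, not functions from the repository.

```python
def scale_width_size(width, height, target_width):
    """(width, height) after scaling to target_width with the aspect ratio kept."""
    if width == target_width:
        return width, height
    return target_width, int(target_width * height / width)


def preprocess_sizes(mode, width, height, load_size, fine_size):
    """Return (size_after_resize, final_size) as (width, height) tuples."""
    if mode == "resize_and_crop":
        resized = (load_size, load_size)
    elif mode == "crop":
        resized = (width, height)
    elif mode == "scale_width":
        resized = scale_width_size(width, height, fine_size)
        return resized, resized  # no crop in this mode
    elif mode == "scale_width_and_crop":
        resized = scale_width_size(width, height, load_size)
    else:
        raise ValueError("mode not covered by this sketch: %s" % mode)
    # The remaining modes end with a random fine_size x fine_size crop,
    # which is only possible if the resized image is at least that large.
    if min(resized) < fine_size:
        raise ValueError("%dx%d image is smaller than fineSize=%d"
                         % (resized[0], resized[1], fine_size))
    return resized, (fine_size, fine_size)


print(preprocess_sizes("scale_width_and_crop", 2048, 1024, 1024, 360))
# ((1024, 512), (360, 360))
```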

+

+#### Fine-tuning/resume training

+To fine-tune a pre-trained model, or resume the previous training, use the `--continue_train` flag. The program will then load the model based on `epoch`. By default, the program will initialize the epoch count as 1. Set `--epoch_count` to specify a different starting epoch count.

+

+#### About image size

+Since the generator architecture in CycleGAN involves a series of downsampling / upsampling operations, the size of the input and output image may not match if the input image size is not a multiple of 4. As a result, you may get a runtime error because the L1 identity loss cannot be enforced with images of different sizes. Therefore, we slightly resize the image so that its dimensions are multiples of 4, even with the `--resize_or_crop none` option. For the same reason, `--fineSize` needs to be a multiple of 4.
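The arithmetic behind the "multiple of 4" rule can be checked directly. This sketch assumes two downsampling layers, each a 3x3 convolution with stride 2 and padding 1, followed by two 2x upsamplings. Under that assumption, a side length comes back unchanged only when it is divisible by 4. `round_trip` and `make_multiple` are hypothetical helpers, and the rounding in `make_multiple` is not necessarily the rule the repository uses.

```python
def conv_down(size):
    # 3x3 conv, stride 2, padding 1 (assumed downsampling layer)
    return (size + 2 * 1 - 3) // 2 + 1


def round_trip(size, n_down=2):
    """Spatial size after n_down stride-2 downsamplings and n_down 2x upsamplings."""
    for _ in range(n_down):
        size = conv_down(size)
    return size * 2 ** n_down


def make_multiple(size, base=4):
    # Round to the nearest multiple of `base`, never below `base`.
    return max(base, int(round(size / base) * base))


print(round_trip(256), round_trip(257))  # 256 260
print(make_multiple(257))                # 256
```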

+

+#### Training/Testing with high res images

+CycleGAN is quite memory-intensive as four networks (two generators and two discriminators) need to be loaded on one GPU, so a large image cannot be entirely loaded. In this case, we recommend training with cropped images. For example, to generate 1024px results, you can train with `--resize_or_crop scale_width_and_crop --loadSize 1024 --fineSize 360`, and test with `--resize_or_crop scale_width --fineSize 1024`. This ensures that training and testing happen at the same scale. At test time, you can afford a higher resolution because you don’t need to load all four networks.

+

+#### About loss curve

+Unfortunately, the loss curve does not reveal much information in training GANs, and CycleGAN is no exception. To check whether the training has converged or not, we recommend periodically generating a few samples and looking at them.

+

+#### About batch size

+For all experiments in the paper, we set the batch size to 1. If you have spare GPU memory, you can use a larger batch size with batch norm or instance norm. (Note that the default batchnorm does not work well with multi-GPU training; you may consider using [synchronized batchnorm](https://github.com/vacancy/Synchronized-BatchNorm-PyTorch) instead.) But please be aware that it can impact the training. In particular, even with instance normalization, different batch sizes can lead to different results. Moreover, increasing `--fineSize` may be a good alternative to increasing the batch size.

diff --git a/environment.yml b/environment.yml

new file mode 100755

index 00000000..f7f382a6

--- /dev/null

+++ b/environment.yml

@@ -0,0 +1,14 @@

+name: pytorch-CycleGAN-and-pix2pix

+channels:

+- peterjc123

+- defaults

+dependencies:

+- python=3.5.5

+- pytorch=0.4

+- scipy

+- pip:

+ - dominate==2.3.1

+ - git+https://github.com/pytorch/vision.git

+ - Pillow==5.0.0

+ - numpy==1.14.1

+ - visdom==0.1.7

diff --git a/models/__init__.py b/models/__init__.py

new file mode 100755

index 00000000..4d920917

--- /dev/null

+++ b/models/__init__.py

@@ -0,0 +1,39 @@

+import importlib
+from models.base_model import BaseModel
+
+
+def find_model_using_name(model_name):
+    # Given the option --model [modelname],
+    # the file "models/modelname_model.py"
+    # will be imported.
+    model_filename = "models." + model_name + "_model"
+    modellib = importlib.import_module(model_filename)
+
+    # In the file, the class called ModelNameModel() will
+    # be instantiated. It has to be a subclass of BaseModel,
+    # and it is case-insensitive.
+    model = None
+    target_model_name = model_name.replace('_', '') + 'model'
+    for name, cls in modellib.__dict__.items():
+        if name.lower() == target_model_name.lower() \
+           and issubclass(cls, BaseModel):
+            model = cls
+
+    if model is None:
+        print("In %s.py, there should be a subclass of BaseModel with class name that matches %s in lowercase." % (model_filename, target_model_name))
+        exit(0)
+
+    return model
+
+
+def get_option_setter(model_name):
+    model_class = find_model_using_name(model_name)
+    return model_class.modify_commandline_options
+
+
+def create_model(opt):
+    model = find_model_using_name(opt.model)
+    instance = model()
+    instance.initialize(opt)
+    print("model [%s] was created" % (instance.name()))
+    return instance
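`find_model_using_name` relies on two naming conventions: `--model cycle_gan` imports the module `models.cycle_gan_model`, and inside it the class whose lowercased name equals `cyclegan` + `model` is selected. The pure-Python sketch below reproduces that lookup against a fake in-memory module so it runs without PyTorch; `FakeBaseModel`, `model_module_name`, `find_model_class`, and the fake module are hypothetical stand-ins. Unlike the original, it checks `isinstance(cls, type)` before calling `issubclass` and raises an exception instead of calling `exit(0)`.

```python
import types


class FakeBaseModel:
    """Hypothetical stand-in for models.base_model.BaseModel."""


def model_module_name(model_name):
    # "--model cycle_gan" -> "models.cycle_gan_model"
    return "models." + model_name + "_model"


def find_model_class(module, model_name):
    # "cycle_gan" -> "cycleganmodel", compared case-insensitively to class names
    target = model_name.replace("_", "") + "model"
    for name, cls in vars(module).items():
        if (name.lower() == target.lower() and isinstance(cls, type)
                and issubclass(cls, FakeBaseModel)):
            return cls
    raise ImportError("%s should define a subclass of BaseModel named like '%s' (case-insensitive)"
                      % (module.__name__, target))


class CycleGANModel(FakeBaseModel):
    pass


fake_module = types.ModuleType(model_module_name("cycle_gan"))
fake_module.CycleGANModel = CycleGANModel
fake_module.helper = object()  # ignored: its name does not match

print(model_module_name("cycle_gan"))                       # models.cycle_gan_model
print(find_model_class(fake_module, "cycle_gan").__name__)  # CycleGANModel
```

Raising instead of exiting lets a caller (or a test) handle a misspelled `--model` value, and exits with a failure status if left uncaught.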

diff --git a/models/__init__.pyc b/models/__init__.pyc

new file mode 100755

index 00000000..b7344327

Binary files /dev/null and b/models/__init__.pyc differ

diff --git a/models/base_model.py b/models/base_model.py

new file mode 100755

index 00000000..b98ea27d

--- /dev/null

+++ b/models/base_model.py

@@ -0,0 +1,159 @@

+import os
+import torch
+from collections import OrderedDict
+from . import networks
+
+
+class BaseModel():
+
+    # modify parser to add command line options,
+    # and also change the default values if needed
+    @staticmethod
+    def modify_commandline_options(parser, is_train):
+        return parser
+
+    def name(self):
+        return 'BaseModel'
+
+    def initialize(self, opt):
+        self.opt = opt
+        self.gpu_ids = opt.gpu_ids
+        self.isTrain = opt.isTrain
+        self.device = torch.device('cuda:{}'.format(self.gpu_ids[0])) if self.gpu_ids else torch.device('cpu')
+        self.save_dir = os.path.join(opt.checkpoints_dir, opt.name)
+        if opt.resize_or_crop != 'scale_width':
+            torch.backends.cudnn.benchmark = True
+        self.loss_names = []
+        self.model_names = []
+        self.visual_names = []
+        self.image_paths = []
+
+    def set_input(self, input):
+        self.input = input
+
+    def forward(self):
+        pass
+
+    # load and print networks; create schedulers
+    def setup(self, opt, parser=None):
+        if self.isTrain:
+            self.schedulers = [networks.get_scheduler(optimizer, opt) for optimizer in self.optimizers]
+
+        if not self.isTrain or opt.continue_train:
+            self.load_networks(opt.epoch)
+        self.print_networks(opt.verbose)
+
+    # switch models to eval mode during test time
+    def eval(self):
+        for name in self.model_names:
+            if isinstance(name, str):
+                net = getattr(self, 'net' + name)
+                net.eval()
+
+    # used in test time, wrapping `forward` in no_grad() so we don't save
+    # intermediate steps for backprop
+    def test(self):
+        with torch.no_grad():
+            self.forward()
+
+    # get image paths
+    def get_image_paths(self):
+        return self.image_paths
+
+    def optimize_parameters(self):
+        pass
+
+    # update learning rate (called once every epoch)
+    def update_learning_rate(self):
+        for scheduler in self.schedulers:
+            scheduler.step()
+        lr = self.optimizers[0].param_groups[0]['lr']
+        print('learning rate = %.7f' % lr)
+
+    # return visualization images. train.py will display these images, and save the images to an HTML file
+    def get_current_visuals(self):
+        visual_ret = OrderedDict()
+        for name in self.visual_names:
+            if isinstance(name, str):
+                visual_ret[name] = getattr(self, name)
+        return visual_ret
+
+    # return training losses/errors. train.py will print out these errors as debugging information
+    def get_current_losses(self):
+        errors_ret = OrderedDict()
+        for name in self.loss_names:
+            if isinstance(name, str):
+                # float(...) works for both scalar tensor and float number
+                errors_ret[name] = float(getattr(self, 'loss_' + name))
+        return errors_ret
+
+    # save models to the disk
+    def save_networks(self, epoch):
+        for name in self.model_names:
+            if isinstance(name, str):
+                save_filename = '%s_net_%s.pth' % (epoch, name)
+                save_path = os.path.join(self.save_dir, save_filename)
+                net = getattr(self, 'net' + name)
+
+                if len(self.gpu_ids) > 0 and torch.cuda.is_available():
+                    torch.save(net.module.cpu().state_dict(), save_path)
+                    net.cuda(self.gpu_ids[0])
+                else:
+                    torch.save(net.cpu().state_dict(), save_path)
+
+    def __patch_instance_norm_state_dict(self, state_dict, module, keys, i=0):
+        key = keys[i]
+        if i + 1 == len(keys):  # at the end, pointing to a parameter/buffer
+            if module.__class__.__name__.startswith('InstanceNorm') and \
+                    (key == 'running_mean' or key == 'running_var'):
+                if getattr(module, key) is None:
+                    state_dict.pop('.'.join(keys))
+            if module.__class__.__name__.startswith('InstanceNorm') and \
+               (key == 'num_batches_tracked'):
+                state_dict.pop('.'.join(keys))
+        else:
+            self.__patch_instance_norm_state_dict(state_dict, getattr(module, key), keys, i + 1)
+
+    # load models from the disk
+    def load_networks(self, epoch):
+        for name in self.model_names:
+            if isinstance(name, str):
+                load_filename = '%s_net_%s.pth' % (epoch, name)
+                load_path = os.path.join(self.save_dir, load_filename)
+                net = getattr(self, 'net' + name)
+                if isinstance(net, torch.nn.DataParallel):
+                    net = net.module
+                print('loading the model from %s' % load_path)
+                # if you are using PyTorch newer than 0.4 (e.g., built from
+                # GitHub source), you can remove str() on self.device
+                state_dict = torch.load(load_path, map_location=str(self.device))
+                if hasattr(state_dict, '_metadata'):
+                    del state_dict._metadata
+
+                # patch InstanceNorm checkpoints prior to 0.4
+                for key in list(state_dict.keys()):  # need to copy keys here because we mutate in loop
+                    self.__patch_instance_norm_state_dict(state_dict, net, key.split('.'))
+                net.load_state_dict(state_dict)
+
+    # print network information
+    def print_networks(self, verbose):
+        print('---------- Networks initialized -------------')
+        for name in self.model_names:
+            if isinstance(name, str):
+                net = getattr(self, 'net' + name)
+                num_params = 0
+                for param in net.parameters():
+                    num_params += param.numel()
+                if verbose:
+                    print(net)
+                print('[Network %s] Total number of parameters : %.3f M' % (name, num_params / 1e6))
+        print('-----------------------------------------------')
+
+    # set requires_grad=False to avoid unnecessary gradient computation
+    def set_requires_grad(self, nets, requires_grad=False):
+        if not isinstance(nets, list):
+            nets = [nets]
+        for net in nets:
+            if net is not None:
+                for param in net.parameters():
+                    param.requires_grad = requires_grad
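Much of `BaseModel` is driven by string conventions: each entry `X` in `loss_names` is read from the attribute `loss_X`, each entry `Y` in `model_names` refers to the attribute `netY`, and checkpoints are stored as `<epoch>_net_<Y>.pth` under `save_dir`. The sketch below reproduces those lookups on a hypothetical `ToyModel` that uses plain Python values in place of tensors and networks, so it runs without PyTorch; the loss values, network names, and directory name are made up for illustration.

```python
import os
from collections import OrderedDict


class ToyModel:
    """Hypothetical object following BaseModel's naming conventions."""

    def __init__(self, save_dir):
        self.save_dir = save_dir
        self.loss_names = ["D_A", "G_A"]
        self.model_names = ["G_A", "D_A"]
        self.loss_D_A = 0.25  # made-up values; real models store scalar tensors here
        self.loss_G_A = 1.5
        self.netG_A = "generator placeholder"  # real models store nn.Module objects
        self.netD_A = "discriminator placeholder"

    def get_current_losses(self):
        # Same lookup as BaseModel.get_current_losses: "X" -> attribute "loss_X"
        return OrderedDict((name, float(getattr(self, "loss_" + name)))
                           for name in self.loss_names)

    def named_networks(self):
        # Same lookup as eval()/save_networks(): "Y" -> attribute "netY"
        return OrderedDict((name, getattr(self, "net" + name)) for name in self.model_names)

    def checkpoint_paths(self, epoch):
        # Same file pattern as save_networks()/load_networks()
        return OrderedDict((name, os.path.join(self.save_dir, "%s_net_%s.pth" % (epoch, name)))
                           for name in self.model_names)


model = ToyModel(os.path.join("checkpoints", "experiment_name"))
print(model.get_current_losses())
print(model.checkpoint_paths(5)["G_A"])
```

A subclass that forgets to define `loss_X` or `netY` for a listed name would fail with an `AttributeError` in these lookups, which is why the lists and the attributes have to be kept in sync.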

diff --git a/models/base_model.pyc b/models/base_model.pyc

new file mode 100755

index 00000000..3fee66b1

Binary files /dev/null and b/models/base_model.pyc differ

diff --git a/models/cycle_gan_model.py b/models/cycle_gan_model.py

new file mode 100755

index 00000000..825f90d0

--- /dev/null

+++ b/models/cycle_gan_model.py

@@ -0,0 +1,149 @@

+import torch
+import itertools
+from util.image_pool import ImagePool
+from .base_model import BaseModel
+from . import networks
+
+
+class CycleGANModel(BaseModel):
+    def name(self):
+        return 'CycleGANModel'
+
+    @staticmethod
+    def modify_commandline_options(parser, is_train=True):
+        # default CycleGAN did not use dropout
+        parser.set_defaults(no_dropout=True)
+        if is_train:
+            parser.add_argument('--lambda_A', type=float, default=10.0, help='weight for cycle loss (A -> B -> A)')
+            parser.add_argument('--lambda_B', type=float, default=10.0,
+                                help='weight for cycle loss (B -> A -> B)')