- Project was born as a proof of concept. The Core service is provided as an API that will analyze some text and perform frequency/length based queries. (e.g. List top 50 words from submitted text with length between 10 and 50).

- The backend is written in C# with ASP.NET, then containerized using Docker (and deployed at Heroku). It provides the core service along with utilities to preprocess and serialize/deserialize the data structures summarizing the text internal frequency structure.

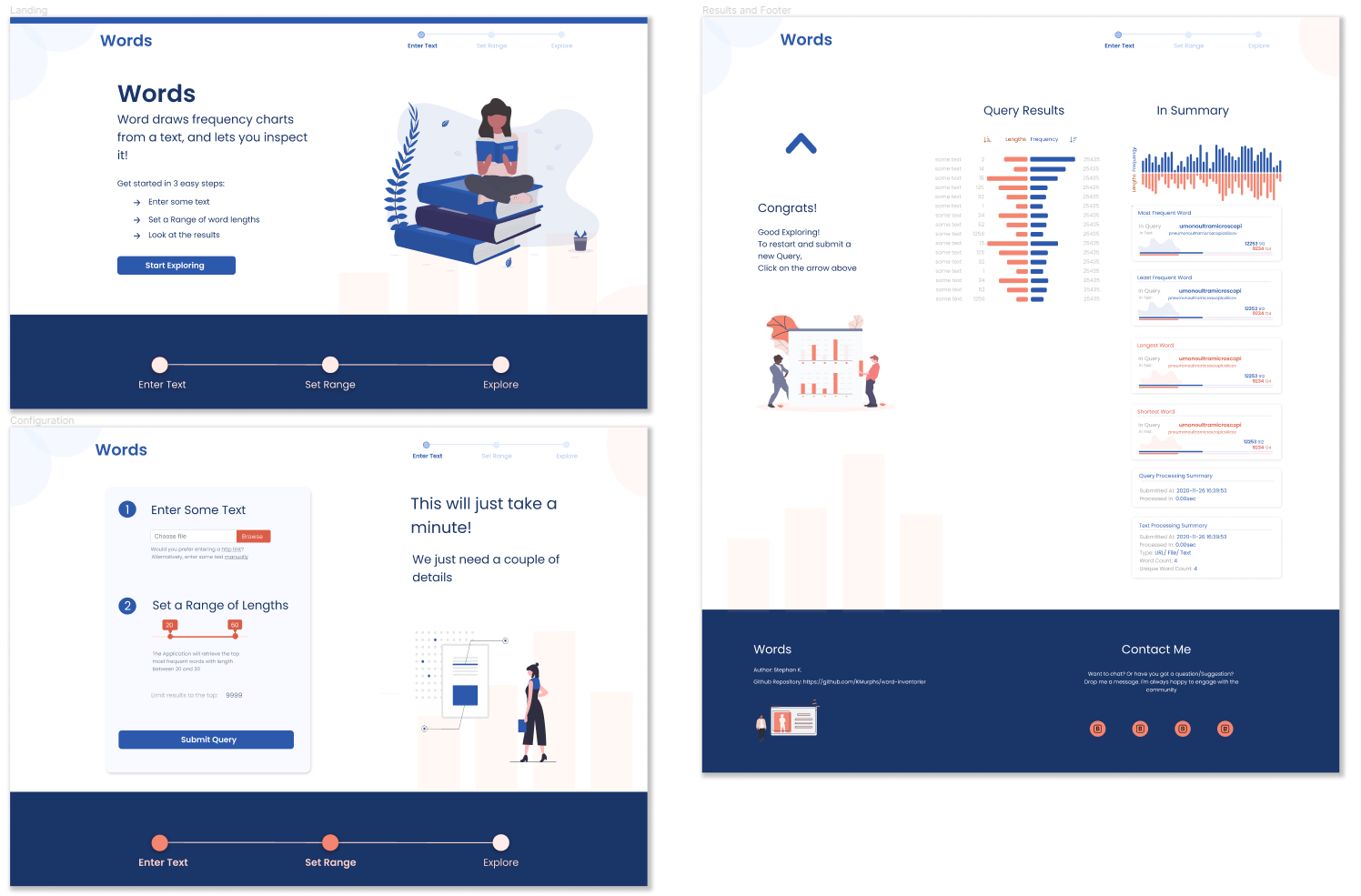

- To illustrate usage, a front client was designed using Figma, and implemented using ReactJS, Typescript and Tailwindcss

- The front end application is served by the backend but interact with the core service using the API presented above.

Project used to build an inventory of words from a body of text while keeping track of some statistics like frequency and length. As the sole developer, I conceived, designed, implemented and documented the solution.

Access the application live at: https://corpus-inventory.herokuapp.com/

Duration: 2 weeks

Tools, Technologies, Skills: NUnit Testing Framework, ASP.NET, REST API, Docker, Heroku, Figma, React, Typescript, Tailwindcss

Languages: C#, Batch Files, Javascript/Typescript

Initially, the concept was born out of a discussion with a friend. I had some free time on my hand and was wondering the best method to quickly analyze a huge amount of text and perform queries such as retrieving the top 100 most frequent words with lengths between 10 and 50 for example.

-

The initial question was how to retrieve the top (50) most frequent in a body of text. There are many solution to a problem like this:

- The initial inclination was to use a dictionary where each word would become a key associated to the number of times that word occured.

Then an extra requirement was added to make the problem a little bit more interesting:- The algorithm should be able to look at words with lengths in a certain range (say between 10 and 20).

The initial algorithm would still inventory the whole text in

O(N), where N is the number of words in the text. The dictionary would call for a space complexity ofO(M), where M is the number of unique words in the text. -

From thereon, to retrieve the top 50, a sorting algorithm could be used and the solution would take

O(MlogM).

A modified bubble sort could bring down the time complexity toO(kM)by only considering the top K most frequent words - As long as, K is way smaller than logM (K << logM), the modified bubble sort is a better alternative.

On top of this, this modified bubble would handle the length requirement of the query without trouble at all. -

Another requirement was to be able to reuse the processing of this huge text to run different queries at a later point. This meant that the text processing algorithm and the query processing happened at different point in time.

- So the dictionary algorithm in

O(N)andO(M)could still be used for the text processing. The query processing would use the modified bubble sorting inO(M)andO(k).

A step further was to investigate whether this last time complexity of

O(M)could be reduced.

For that, an extra data structure was created during the text processing.- This data structure is also a dictionary where keys are words lengths in the text, and values are linkedlist of words whose lengths ave the value specified by the key they are associated to. This extra structure adds another

O(M + d)to the space complexity of the text processing phase - d (the number of possible word lengths within the text) is bounded by the longest word in the english dictionary pneumonoultramicroscopicsilicovolcanoconiosis - Length = 45.d <= 45therefored << M.

This data structure will bring down the time complexity of the query processing by allowing the algorithm to only process words that have length within a specified range. If there are V such words, the new time complexity is

O(V).

In some instances V can be way smaller than M. - So the dictionary algorithm in

In summary,

- Text Processing:

O(N) Time Complexity, O(M) space complexity - Query Processing:

O(V) Time Complexity, O(k) space complexity

where,

- N: number of words in text

- M: number of unique words in text

- V: number of unique words with length within the specified range

- k: number of words of interest (the top k most frequent words)

The dictionary of frequencies IDictionary<string, int> and the dictionary of lengths IDictionary<int, LinkedList<string>> can easily be serialized and deserialized.

See <Current Repository>/text-inventorier/Inventorier/

The first step was to implement the core service that would process the text and run the query. Tests were developed to ensure functionality and corner cases (See <Current Repository>/text-inventorier/Inventorier.NUnitTests/)

A range of utility classes are used to fetch the text when a URL is provided, preprocess the text (Handle special characters, lower case all the text, ...), serialize and deserialize the data structures, hashing the text into an ID that can be used later to skip re-processing the text if the processing result is stored in our database.

<Current Repository>/text-inventorier/scripts/misc.bat describes some of the commands used to configure the C# project in Visual Studio Code (VSCode).

See <Current Repository>/text-inventorier/WebApp/

Now that we have functional code, we can wrap it into an API.

First step was to set up a ASP.NET server locally, and integrate with our core service at endpoint <Site URL>/api/corpusinventory. Then integrate with MongoDB to store and retrieve serialized data structures.

A docker image for aspnet:3.1 is available (See <Current Repository>/text-inventorier/WebApp/DockerFile). The image was built, pushed to a remote repo, and Heroku was configured to retrieve and run the docker image.

<Current Repository>/text-inventorier/scripts/push-app-to-backend.bat describes some of the commands used to perform this.

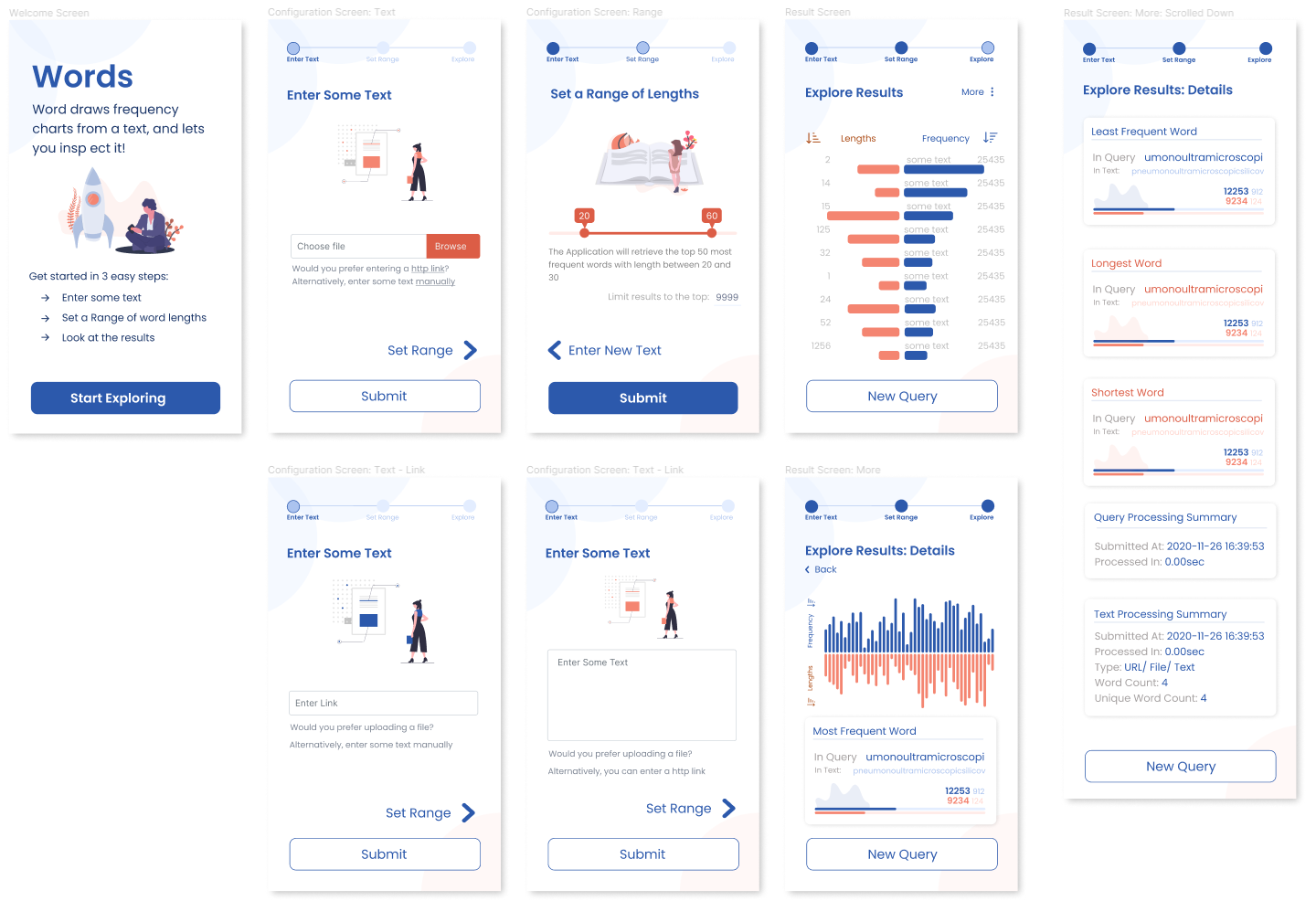

To show how the API could be consumed, a React Client was to be written that would demonstrate this.

Sites like Dribble, Behance, and Google were surveyed to generate ideas for a suitable UI/UX concepts related to our word inventorier application.

Information Architecture was first established as the most important aspect of the UI/UX considerations. Once related questions were answered, wireframes were drafted.

See <Current Repository>/ui-design/

Figma was used to design the UI.

See <Current Repository>/ui/

Setting up tailwindcss with create-react-app was quite the mission.

<Current Repository>/text-inventorier/scripts/setup-ui.bat describes some of the commands used to setup tailwindcss with create-react-app.

See <Current Repository>/ui/

The build files from npm react build were copied to <Current Repository>/text-inventorier/WebApp/wwwroot

Then, the webapp was recompiled, the docker image rebuilt, retagged and pushed to the remote repo.

Heroku redeployed the new image. The application is live at Heroku

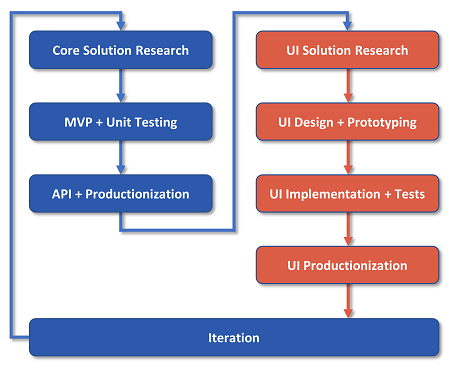

Although the process is described sequentially, there are times were things happen through iteration.

Some files were added to expose the project repo as github pages

<Current Repository>/assets/<Current Repository>/_config.yml

- https://stackoverflow.com/questions/4495241/given-a-file-find-the-ten-most-frequently-occurring-words-as-efficiently-as-pos

- https://www.geeksforgeeks.org/python-count-occurrences-of-each-word-in-given-text-file-using-dictionary/

- https://tailwindcss.com/docs/guides/create-react-app

- https://tailwindcss.com/docs/installation

- https://tailwindcss.com/docs/installation#post-css-7-compatibility-build

- Some docs are available as pdfs under

<Current Repository>/docs/